When 1-bit is enough for LLM

During execution, LLMs are loaded into the memory. 7B models take a lot of space - 21.33GB. The reason is that every parameter is stored as float16 type. We can save a lot of space by reducing the precision of each parameter. This is exactly what quantization does. Quantization changes the precision of every parameter, and as a result, the model itself becomes a lot lighter. Quantization is not a free lunch; we trade quality for speed. But how small can each parameter be in order to maintain an acceptable level?

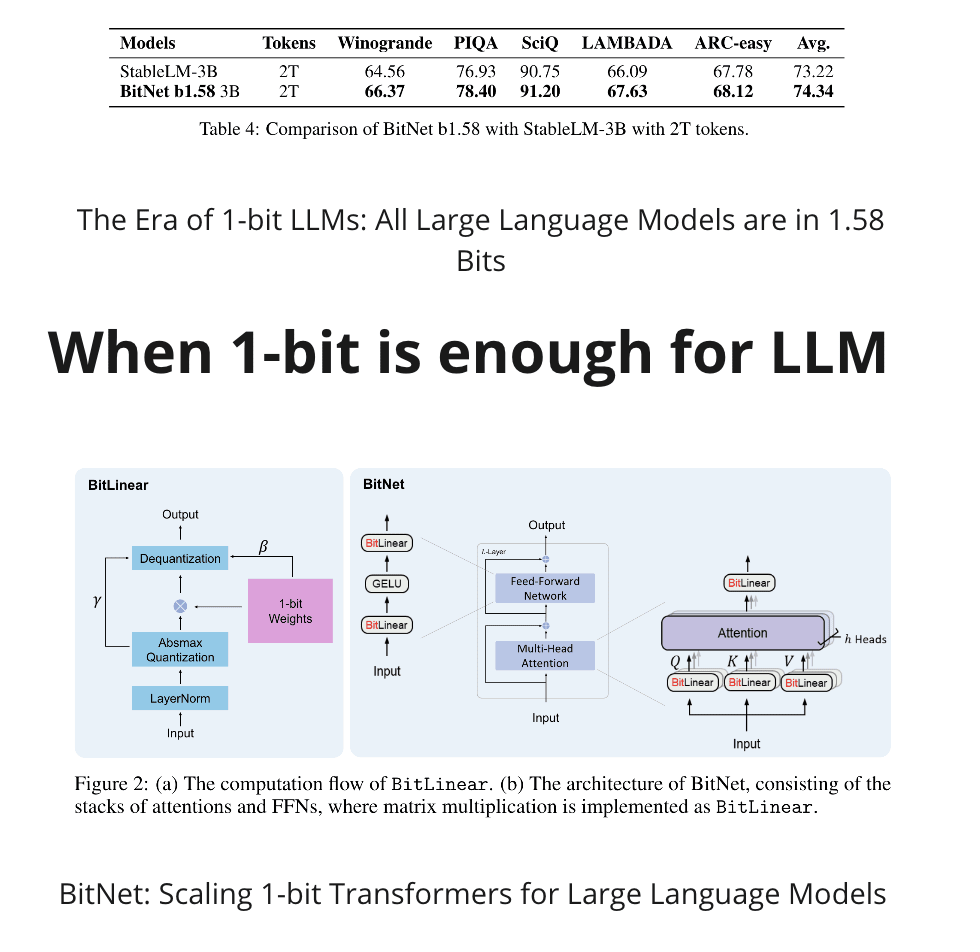

BitNet proposed an interesting approach - train a LLM with reduced precision. Quantization happens after training when we ready to do inference. BitNet happens during training. To implement BitNet we need to replace Liner layers in Transformer architecture with BitLinear layers.

BitLinear works as a Linear layer but with 1-bit weights. This reduces memory footprint and improves computational efficiency. It's worth noticing that BitNet does dequantization when execution moves from one layer to another, so it means that other components stay high-precision.

Ideas of BitNet were further developed in the BitNet b1.58 model. The new model operates with {-1, 0, +1}. 0 was added to the list. If BitNet was compared in performance to quantized versions of LLMs, BitNet b1.58, on the other hand, matches in performance to the full-precision LLMs.

The interesting aspect of these papers is the reduced computational requirements. Inside LLM, we do millions of matrix multiplications. To multiply a matrix(weights) by a vector(input), we must do multiplication and addition. If your matrix consists of only {-1, 0, 1}, you can easily replace multiplication with addition only. Results show that energy efficiency was improved by 18-40 times depending on the model size.

The idea of using bit precision can be extended beyond the Linear layer only. There are open-sourced implementations for almost all modern approaches - BitMoE, BitMamba, BitLora, BitAttention etc.

References:

https://arxiv.org/abs/2310.11453 - BitNet: Scaling 1-bit Transformers for Large Language Models

https://arxiv.org/abs/2402.17764v1 - The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits

https://github.com/microsoft/unilm/blob/master/bitnet/The-Era-of-1-bit-LLMs__Training_Tips_Code_FAQ.pdf - The Era of 1-bit LLMs: Training Tips, Code and FAQ

https://github.com/kyegomez/BitNet - Implementation of "BitNet: Scaling 1-bit Transformers for Large Language Models" in pytorch