Use computers like it's 1972, but this time with LLMs

Anthropic just presented a computer use model. The idea is to extend AI agents beyond REST API calls and give them access to the local computer, so that the model can control the mouse and understand the currently open programs by looking at screenshots. With these capabilities, a user can delegate routine tasks to the model.

When we talk about building a new specialized model in the GenAI space, there are three questions we need to answer. The first is whether we want to reuse an existing model or build a new one. The second is whether we have a proper dataset to train our model. The third is whether we have a proper benchmark.

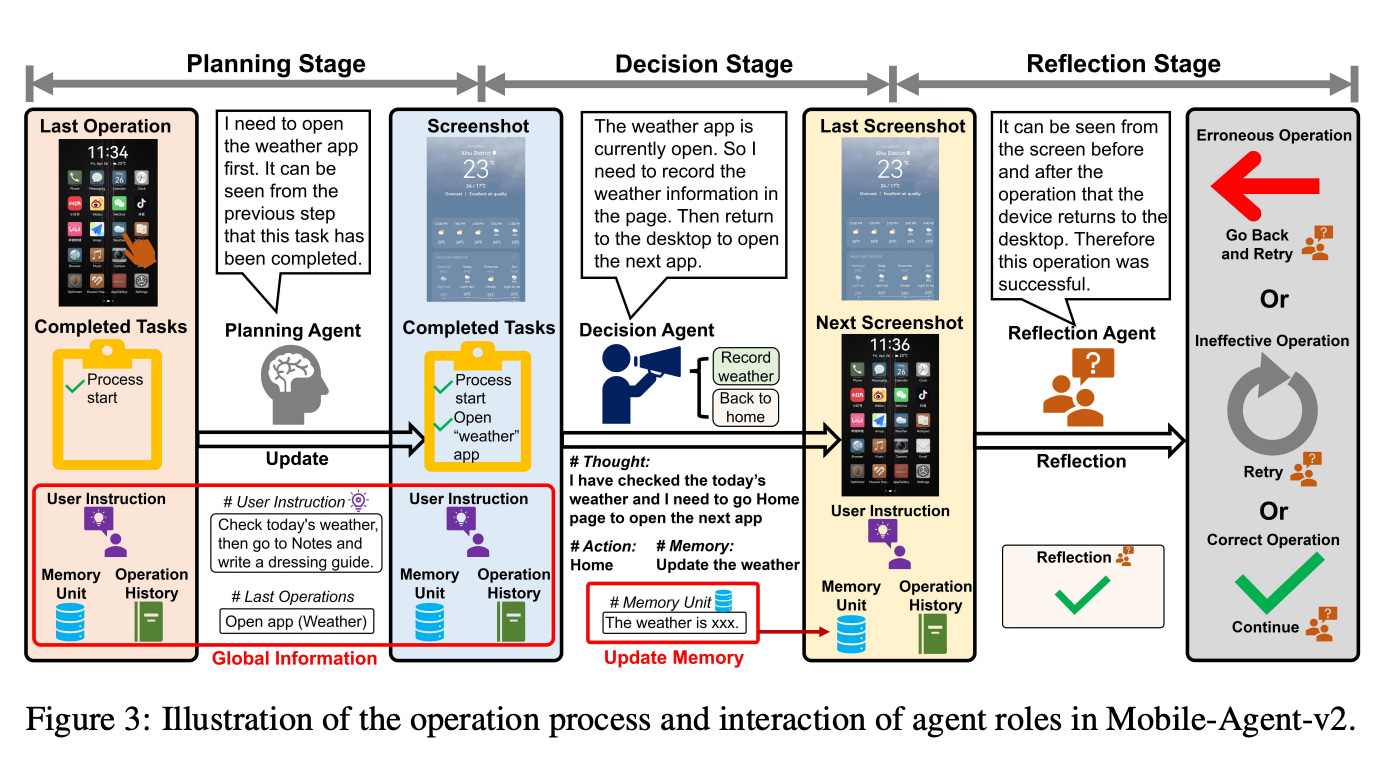

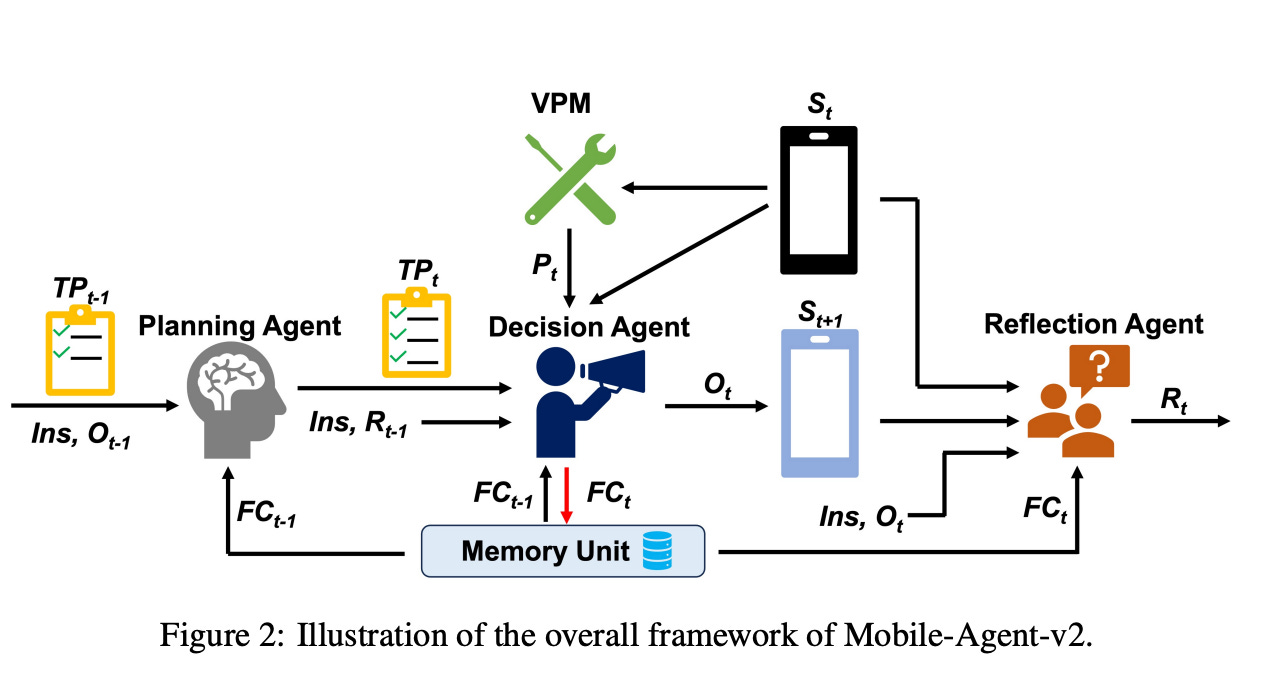

The Mobile-Agent-v2 paper shows how to reuse existing models and build your own assistant. As an environment, it uses mobile phones. The architecture consists of a Visual Perception Module (provides screen recognition capabilities, such as text recognition, icon recognition and icon description), a Memory Unit, a Planning Agent (summarizes the history and tracks progress), a Decision Agent (generates operations and updates memory) and a Reflection Agent (observes the state before and after each operation). GPT-4 models are used for the Planning, Decision and Reflection Agents. The ConvNextViT-document, GroundingDINO and Qwen-VL-Int4 models are used in the Visual Perception Module for OCR, icon recognition and icon description.
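The division of roles described above can be sketched as a simple loop. This is only an illustration of the control flow, not the paper's code: the function names (`perceive`, `plan`, `decide`, `act`, `reflect`), the `Memory` class and the `"stop"` signal are hypothetical placeholders for the real model calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Memory:
    """Hypothetical memory unit: free-form notes kept across steps."""
    notes: List[str] = field(default_factory=list)


def run_episode(
    instruction: str,
    perceive: Callable[[], str],   # stands in for the Visual Perception Module
    plan: Callable,                # stands in for the Planning Agent
    decide: Callable,              # stands in for the Decision Agent
    act: Callable[[str], None],    # executes an operation on the device
    reflect: Callable,             # stands in for the Reflection Agent
    max_steps: int = 10,
) -> List[str]:
    memory = Memory()
    history: List[str] = []
    progress = ""
    for _ in range(max_steps):
        before = perceive()
        # Planning: summarize the history into a short progress description.
        progress = plan(instruction, history, progress)
        # Decision: choose the next operation and optionally update memory.
        operation = decide(instruction, progress, before, memory)
        if operation == "stop":  # assumed convention for "task finished"
            break
        act(operation)
        after = perceive()
        # Reflection: keep the operation only if the screen changed as expected.
        if reflect(before, after, operation):
            history.append(operation)
    return history
```

In a real system each callable would wrap a model call (for example, a GPT-4 prompt for `plan`), and a failed reflection would likely trigger a retry or a corrective step rather than just being dropped from the history.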

Before building our own model, we need a good training dataset. The MultiUI dataset is exactly what we might need. It contains 7.3 million samples from 1 million websites. The dataset consists of multimodal instructions generated by LLMs from accessibility trees. These instructions cover understanding and reasoning (explain the webpage, find the name of the author, etc.), text recognition (OCR) and visual grounding (find an element on the page).
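To get a feel for how an accessibility tree can turn into a visual grounding sample, here is a toy sketch. It is not the MultiUI pipeline: the dataset uses LLMs to write the instructions, while this example uses a fixed template, and the node fields (`role`, `name`, `bbox`, `children`) and the sample format are assumptions made for illustration.

```python
def grounding_samples(node, samples=None):
    """Walk a (hypothetical) accessibility tree and emit grounding samples.

    Each sample pairs an instruction with the bounding box of the element
    that answers it. A template stands in for the LLM here.
    """
    if samples is None:
        samples = []
    name = node.get("name")
    bbox = node.get("bbox")
    if name and bbox:
        role = node.get("role", "element")
        samples.append({
            "instruction": f"Find the {role} labeled '{name}'.",
            "answer": bbox,  # assumed format: [left, top, right, bottom]
        })
    for child in node.get("children", []):
        grounding_samples(child, samples)
    return samples


# A tiny made-up tree for one page.
tree = {
    "role": "main",
    "children": [
        {"role": "button", "name": "Subscribe", "bbox": [10, 20, 110, 50]},
        {"role": "link", "name": "About", "bbox": [10, 60, 60, 80]},
    ],
}
for sample in grounding_samples(tree):
    print(sample)
```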

Computer use models face a unique problem: precisely locating UI elements on the screen, including icons. The Ferret-UI paper shows how to build a model that meets this requirement. Internally, the Ferret-UI model contains a pre-trained visual encoder and a Vicuna language model. It accepts raw screen pixels as input, which allows the model to detect even small objects on the screen. This sets it apart from other models, which require low-resolution images. Training the model took 3 days and 250K samples from the training dataset.
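A quick back-of-the-envelope calculation shows why low-resolution input hurts small elements. The numbers below (a 2532-pixel-tall phone screen, a 60-pixel icon, a 336-pixel model input) are illustrative assumptions, not figures from the Ferret-UI paper.

```python
def scaled_size(element_px: float, screen_px: float, model_input_px: float) -> float:
    """Size of a UI element after the screenshot is resized to the model input."""
    return element_px * model_input_px / screen_px


# Assumed example: a 60 px icon on a 2532 px tall screen, resized to 336 px.
icon_after_resize = scaled_size(60, 2532, 336)
print(f"{icon_after_resize:.1f} px")  # roughly 8 px
```

At roughly 8 pixels, an icon has very little detail left to recognize, which is the motivation for feeding the model something closer to the original resolution.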

Now the hardest part: proving that your solution is worth attention. For computer use models, OSWorld is one of the best benchmarks today, and it is hard enough that even humans accomplish only 72% of the tasks. The benchmark contains 369 computer tasks. Most importantly, OSWorld provides simulation infrastructure (an executable environment) built on virtual machine software. The evaluation process starts by sending screenshots, an accessibility tree and terminal output to the agent. The agent sends back instructions such as click(5, 7), which means a mouse click at the given coordinates. Once the instructions are executed, the evaluation scripts check the result. They examine the actual state of the virtual machine, not the agent's instructions.
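The idea of state-based evaluation can be shown with a toy environment. This is a minimal sketch, not OSWorld's API: `ToyEnvironment`, `parse_action` and `evaluate` are hypothetical names, and a single checkbox stands in for the whole virtual machine.

```python
import re

# Matches instructions of the form name(arg1, arg2, ...).
ACTION_RE = re.compile(r"^(\w+)\((.*)\)$")


def parse_action(text):
    """Parse an instruction such as 'click(5, 7)' into ('click', (5, 7))."""
    match = ACTION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"Cannot parse action: {text!r}")
    name, raw_args = match.groups()
    args = tuple(int(arg) for arg in raw_args.split(",") if arg.strip())
    return name, args


class ToyEnvironment:
    """Stand-in for the virtual machine: one checkbox at a fixed position."""

    def __init__(self):
        self.state = {"checkbox_checked": False}
        self.checkbox_box = (0, 0, 10, 10)  # left, top, right, bottom

    def step(self, action_text):
        name, args = parse_action(action_text)
        if name == "click":
            x, y = args
            left, top, right, bottom = self.checkbox_box
            if left <= x <= right and top <= y <= bottom:
                self.state["checkbox_checked"] = not self.state["checkbox_checked"]


def evaluate(env):
    """Check the final state of the environment, not the actions taken."""
    return env.state["checkbox_checked"]


env = ToyEnvironment()
env.step("click(5, 7)")
print(evaluate(env))
```

Because `evaluate` looks only at the final state, any sequence of actions that leaves the checkbox ticked counts as success, and a sequence that looks plausible but misses the checkbox does not.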

A few observations. The accessibility tree plays a key role in agents' training and reasoning. Low-resolution images of UI screens significantly reduce the quality of models. It's important to check the final state of the machine rather than the agent's execution flow. This helps train agents to handle cases such as a sudden pop-up window hiding half of the window. A new safety model is needed when agents have access to a mouse and keyboard. Even the best model currently gets only 14.9% on OSWorld.

Interestingly, in 1972 Xerox showed a vision of the office of the future. From a technical perspective, they showed a lot of cool stuff, including a GUI, a mouse, and voice control. Basically, it predicted half a century of development in the computer industry. We have finally added what may be the most complex piece of Xerox's vision.

References:

YouTube - Xerox Parc - Office Alto Commercial from 1972

https://arxiv.org/abs/2404.05719 - Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs

https://arxiv.org/abs/2406.01014v1 - Mobile-Agent-v2: Mobile Device Operation Assistant with Effective Navigation via Multi-Agent Collaboration

https://arxiv.org/abs/2410.13824 - Harnessing Webpage UIs for Text-Rich Visual Understanding

https://www.anthropic.com/research/developing-computer-use - Developing a computer use model

https://arxiv.org/abs/2404.07972 - OSWorld: Benchmarking Multimodal Agents for Open-Ended Tasks in Real Computer Environments

https://spectrum.ieee.org/xerox-alto - 50 Years Later, We’re Still Living in the Xerox Alto’s World