The GenAI world can be tricky to understand. Numbers add a new perspective.

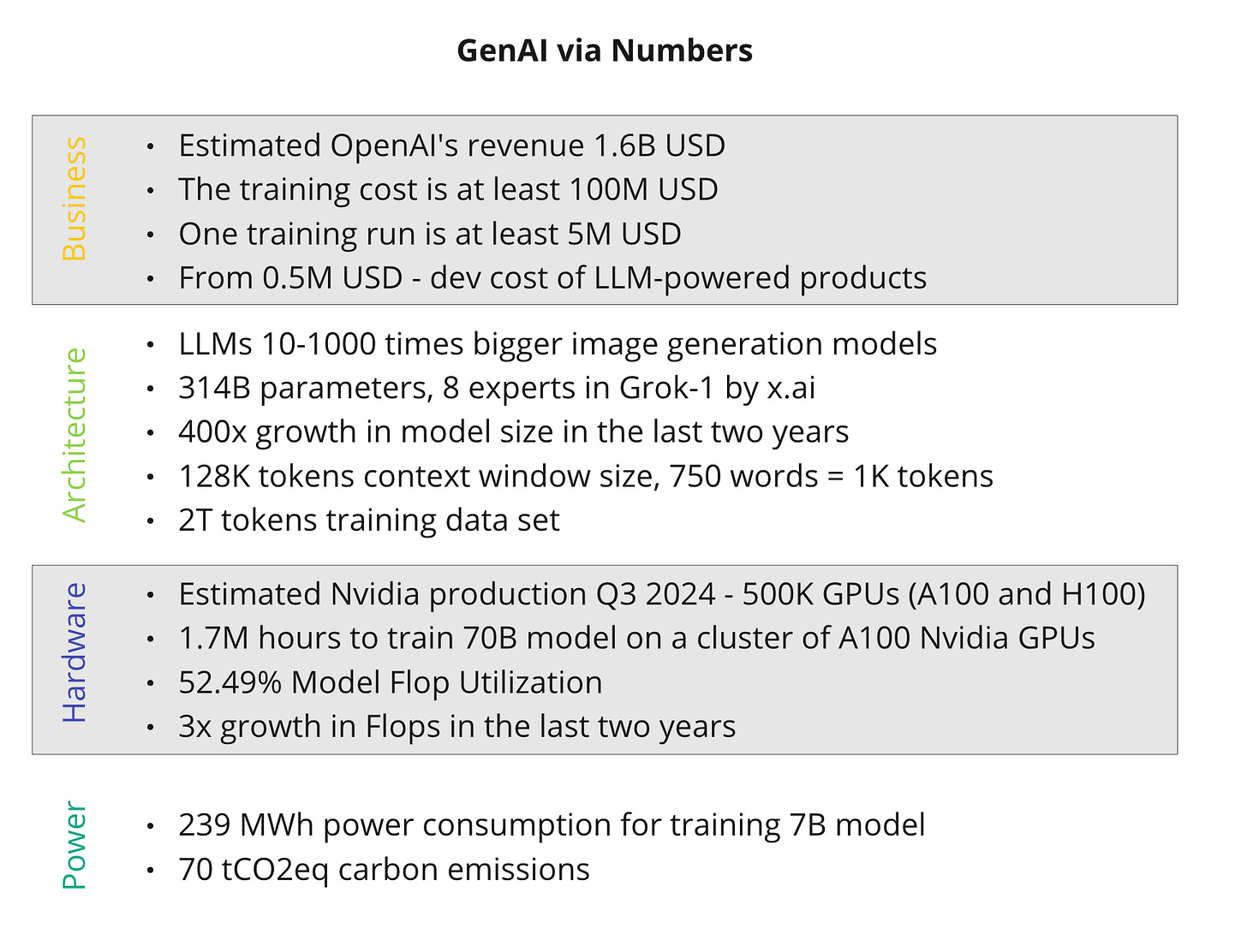

To run or train an LLM, one needs GPUs, and a lot of them. The two largest buyers of Nvidia GPUs in Q3 2023 were Meta and Microsoft, each buying about 150K GPUs. Nvidia sold an estimated 500K GPUs in that period, which makes Meta and Microsoft together responsible for 60% of the total.
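The 60% figure follows directly from the numbers quoted above. A minimal sketch of the arithmetic, using only those estimates (the variable names are illustrative):

```python
# Figures quoted in the text (Q3 2023 estimates, in GPUs).
meta_gpus = 150_000
microsoft_gpus = 150_000
total_sold = 500_000

# Combined share of the two largest buyers.
combined_share = (meta_gpus + microsoft_gpus) / total_sold
print(f"Meta + Microsoft share: {combined_share:.0%}")
```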

In the case of Meta, we know that 1.7M GPU hours were used to train the Llama 2 70B model. Training time grew roughly 2x when Meta moved from Llama to Llama 2. The leader here is PaLM-540B, with 8.8M hours.

Interestingly, GPUs are not utilized at 100% during training. About 50% utilization is the industry average. Meta has shared its own number: 52.49% Model FLOPs Utilization (MFU). Over the last two years, model size scaled 400x, while FLOPS scaled only 3x and memory operations only 1.4x. Memory operations might therefore become a big issue in scaling LLMs.
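Model FLOPs Utilization (MFU) is usually understood as achieved training throughput divided by the theoretical hardware peak. The sketch below assumes the common approximation of about 6 FLOPs per parameter per token for training, which ignores attention cost. The function name and all input values are hypothetical and are not Meta's actual figures.

```python
def model_flops_utilization(
    n_params: float,
    tokens_per_second: float,
    n_gpus: int,
    peak_flops_per_gpu: float,
) -> float:
    """Estimate MFU with the ~6 * N FLOPs-per-token training approximation."""
    achieved_flops = 6.0 * n_params * tokens_per_second  # FLOP/s actually delivered
    peak_flops = n_gpus * peak_flops_per_gpu              # FLOP/s the hardware could deliver
    return achieved_flops / peak_flops


# Hypothetical inputs: a 7B-parameter model on 8 GPUs,
# each with an assumed peak of 312e12 FLOP/s.
mfu = model_flops_utilization(
    n_params=7e9,
    tokens_per_second=30_000,
    n_gpus=8,
    peak_flops_per_gpu=312e12,
)
print(f"MFU: {mfu:.1%}")
```

With these made-up inputs the result lands near the 50% industry average mentioned above, which is why measured throughput, not peak FLOPS, is the number to plan around.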

The developers behind the OLMo model shared their power consumption numbers: training the 7B model consumed 239 MWh, equivalent to 478 refrigerators running for a whole year, and produced 70 tCO2eq of carbon emissions. These numbers depend heavily on the data center and hardware. For example, in carbon-neutral data centers, emissions are 0.
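The refrigerator comparison and the emissions figure can be cross-checked with simple arithmetic. This sketch uses only the numbers quoted above; the derived per-refrigerator consumption and carbon intensity are implied values, not figures reported by the OLMo authors.

```python
# Figures quoted in the text for the OLMo 7B model.
training_energy_mwh = 239
refrigerator_years = 478
emissions_tco2eq = 70

# Implied yearly consumption of one refrigerator, in kWh.
kwh_per_fridge_year = training_energy_mwh * 1000 / refrigerator_years

# Implied average carbon intensity of the electricity, in tCO2eq per MWh.
carbon_intensity = emissions_tco2eq / training_energy_mwh

print(f"Per refrigerator: {kwh_per_fridge_year:.0f} kWh/year")
print(f"Carbon intensity: {carbon_intensity:.3f} tCO2eq/MWh")
```

The comparison assumes a refrigerator that draws about 500 kWh per year, and the implied intensity of roughly 0.29 tCO2eq per MWh is an average across whatever data centers were used.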

The models themselves are characterized by two primary numbers. The first is the model size (the number of parameters), which is usually part of the model name. It is interesting to compare LLMs with image generation models: Stable Diffusion has about 0.89B parameters, whereas the smallest Llama 2 has 7B. This suggests that language modeling is considerably more complex. And model size keeps growing: 400x over the last two years.

The second number is context size, which defines how much data we can send to an LLM in one request. It varies from 4K to 1M tokens; GPT-4 Turbo has a 128K context. In English, 1,000 tokens are about 750 words.
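To get a feel for these context sizes, the rule of thumb above can be applied directly. A minimal sketch, assuming the 0.75 words-per-token ratio holds on average for English text (the real ratio depends on the tokenizer and the content):

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb from the text: 1,000 tokens ~ 750 words


def tokens_to_words(tokens: int) -> int:
    """Approximate number of English words that fit into a token budget."""
    return round(tokens * WORDS_PER_TOKEN)


# Context sizes mentioned in the text.
for label, context_tokens in [
    ("4K", 4_000),
    ("128K (GPT-4 Turbo)", 128_000),
    ("1M", 1_000_000),
]:
    print(f"{label}: ~{tokens_to_words(context_tokens):,} words")
```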

Creating LLMs requires a lot of capital. The exact number is unknown, but we can safely assume that it starts at 50M USD. At the same time, revenue estimates for the big players are also quite good: Anthropic projects an annualized revenue rate of at least 850M USD this year, while OpenAI has around 1.6B USD in annual revenue.

Building solutions on top of LLMs is also expensive. A software team's total cost of ownership for experimenting with and delivering products on top of LLMs can be 0.5M to 2.0M USD. For example, building a customer chatbot might require a team of 8 developers working for 9 months.
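The chatbot example can be related to the cost range with a rough calculation. The sketch below assumes, purely for illustration, that the entire total cost of ownership is staffing; in practice it also includes infrastructure and model usage costs, so the implied per-developer figures are upper bounds.

```python
# Team from the chatbot example in the text.
developers = 8
months = 9
person_months = developers * months

# Total cost of ownership range quoted in the text, in USD.
low_budget, high_budget = 500_000, 2_000_000

# Implied monthly cost per developer if all cost were staffing (an assumption).
implied_low = low_budget / person_months
implied_high = high_budget / person_months

print(f"Effort: {person_months} person-months")
print(f"Implied cost per developer-month: {implied_low:,.0f} to {implied_high:,.0f} USD")
```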

If you are interested in more numbers and trends, take a look at https://epochai.org/.

Resources:

https://www.tomshardware.com/tech-industry/nvidia-ai-and-hpc-gpu-sales-reportedly-approached-half-a-million-units-in-q3-thanks-to-meta-facebook - Nvidia sold half a million H100 AI GPUs in Q3 thanks to Meta, Facebook — lead times stretch up to 52 weeks: Report

https://www.truefoundry.com/blog/deploy-and-finetune-llama-2-on-your-cloud - Llama 2 LLM: Deploy & Fine Tune on your cloud

https://medium.com/codenlp/the-training-time-of-the-foundation-models-from-scratch-59bbce90cc87 - The training time of the foundation models (from scratch)

https://medium.com/riselab/ai-and-memory-wall-2cb4265cb0b8 - AI and Memory Wall

https://arxiv.org/abs/2402.00838 - OLMo: Accelerating the Science of Language Models

https://arstechnica.com/gadgets/2023/04/generative-ai-is-cool-but-lets-not-forget-its-human-and-environmental-costs/ - The mounting human and environmental costs of generative AI

YouTube - KGC23 Keynote: The Future of Knowledge Graphs in a World of LLMs — Denny Vrandečić, Wikimedia

YouTube - Yann LeCun - A Path Towards Autonomous Machine Intelligence

https://github.com/xai-org/grok - Grok-1

https://www.theinformation.com/articles/anthropic-projects-at-least-850-million-in-annualized-revenue-rate-next-year - Anthropic Projects At Least $850 Million in Annualized Revenue Rate Next Year

https://www.theinformation.com/articles/openais-customers-consider-defecting-to-anthropic-google-cohere - OpenAI’s Customers Consider Defecting to Anthropic, Microsoft, Google

https://www.forbes.com/sites/craigsmith/2023/09/08/what-large-models-cost-you--there-is-no-free-ai-lunch/ - What Large Models Cost You – There Is No Free AI Lunch

https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/technologys-generational-moment-with-generative-ai-a-cio-and-cto-guide - Technology’s generational moment with generative AI: A CIO and CTO guide

https://epochai.org/ - Epoch is a research institute investigating key trends and questions that will shape the trajectory and governance of AI