The dust is settling, and we can now see the best planning practices for autonomous agents in the LLM world. Planning is one of the key elements of an AI agent: it converts the user's request into something the agent can execute. The better we plan, the better the result we can expect. Several papers on this subject have been published in the last few months.

In short, we need to follow these principles: Have a formal description, Generate and test, Use loops, Have a domain expert on your side, and Do not rely solely on LLMs. Let's say we need to create a travel agent. The agent will be responsible for preparing a trip plan and booking tickets. How do we build the agent based on the principles above?

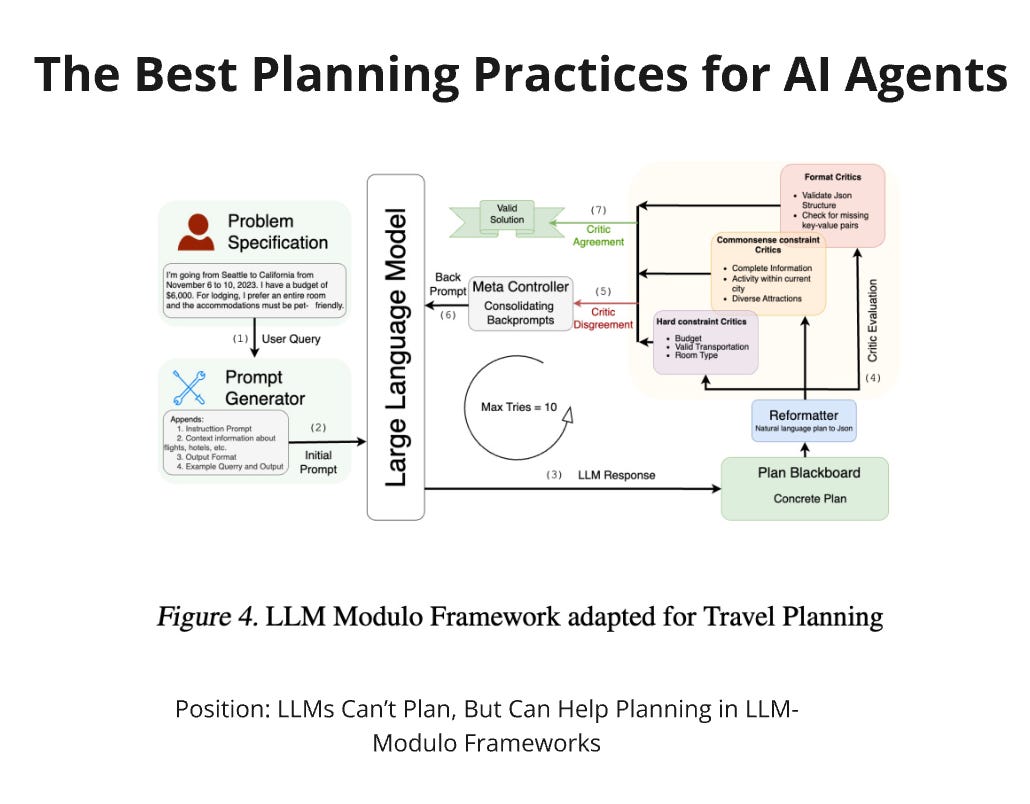

First, we need to understand what a plan is. It can be JSON or pseudocode. The most important thing is that the plan follows a formal schema, which lets us validate the output of a generation immediately. Schema validation is the simplest form of the 'test' half of the 'Generate and test' principle. For a more thorough test, we can also use simulation, unit testing, or LLM criticism. Once we have the test result, we can decide whether the plan is ready to execute or has issues. In the latter case, we repeat the generation step, this time adding the previous plan and the validation results to the LLM prompt. Usually, up to 10 loop iterations are enough to either get a proper plan or tell the user that we cannot fulfil the request.
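The loop described above can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: the plan shape (`steps`, `action`, `params`), the `ALLOWED_ACTIONS` table, and the `generate_plan` callable that stands in for the LLM call are all assumptions made up for the travel-agent example.

```python
import json
from typing import Callable, List, Optional

# Hypothetical schema: actions the travel agent may execute and the
# parameters each action requires.
ALLOWED_ACTIONS = {
    "search_flights": {"origin", "destination", "date"},
    "book_ticket": {"flight_id"},
}

MAX_ITERATIONS = 10  # upper bound on generate-and-test rounds


def validate_plan(raw: str) -> List[str]:
    """Return a list of problems; an empty list means the plan passed."""
    try:
        plan = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(plan, dict) or not isinstance(plan.get("steps"), list):
        return ["plan must be an object with a 'steps' list"]

    errors = []
    for i, step in enumerate(plan["steps"]):
        if not isinstance(step, dict):
            errors.append(f"step {i}: must be an object")
            continue
        action = step.get("action")
        if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
            errors.append(f"step {i}: unknown action {action!r}")
            continue
        params = step.get("params")
        if not isinstance(params, dict):
            errors.append(f"step {i}: 'params' must be an object")
            continue
        missing = ALLOWED_ACTIONS[action] - set(params)
        if missing:
            errors.append(f"step {i}: missing params {sorted(missing)}")
    return errors


def plan_with_retries(
    request: str, generate_plan: Callable[[str], str]
) -> Optional[dict]:
    """Generate, test, and retry; return None if no valid plan was found."""
    prompt = request
    for _ in range(MAX_ITERATIONS):
        raw = generate_plan(prompt)  # stand-in for the LLM call
        errors = validate_plan(raw)
        if not errors:
            return json.loads(raw)
        # Feed the failed plan and the validation results back to the model.
        prompt = (
            f"{request}\n\nPrevious plan:\n{raw}\n\n"
            "Validation errors:\n" + "\n".join(errors)
        )
    return None
```

A `None` result is the signal to tell the user that the request cannot be fulfilled. In a real agent, `validate_plan` could be replaced or extended with a simulation, unit tests, or an LLM critic without changing the loop.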

Second, our end goal is to provide the user with value. In our hypothetical example, a human travel agent is the domain expert. Domain experts can tell us what a good trip plan looks like and which aspects we should include in our prompts. They also know the insights and best practices of the domain, so they can provide rule-based logic for handling edge cases. If we can solve a problem with an if statement, that is much better than making a call to ChatGPT.
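As an illustration of the "if statement instead of an LLM call" idea, here is a small sketch of expert rules written as plain code. The `Leg` type, the rule itself, and the 60-minute threshold are hypothetical examples, not real airline policy; a real domain expert would supply the actual rules.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical threshold a domain expert might give us.
MIN_LAYOVER_MINUTES = 60


@dataclass
class Leg:
    origin: str
    destination: str
    departure_min: int  # minutes since the start of the trip
    arrival_min: int


def check_itinerary(legs: List[Leg]) -> List[str]:
    """Apply expert rules to consecutive legs; return the problems found."""
    problems = []
    for prev, nxt in zip(legs, legs[1:]):
        if prev.destination != nxt.origin:
            problems.append(
                f"{prev.destination} -> {nxt.origin}: legs do not connect"
            )
        elif nxt.departure_min - prev.arrival_min < MIN_LAYOVER_MINUTES:
            problems.append(
                f"layover in {prev.destination} is shorter than "
                f"{MIN_LAYOVER_MINUTES} minutes"
            )
    return problems
```

Checks like this are cheap, deterministic, and easy to test, so they are a good first filter before any LLM-based review.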

A few words about validation. In the recent paper 'LLM Critics Help Catch LLM Bugs', OpenAI described how they built a Python code critic. According to the paper, the critic's reviews were often preferred over those written by human experts or by ChatGPT. A simulation environment can be even better because it gives us a chance to provide the user with new information. For example, only after simulating the plan can we tell the user what their remaining bank account balance would be.
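A simulation can be as simple as a dry run of the plan against an in-memory copy of the user's state. The sketch below assumes a hypothetical plan format in which `book_ticket` steps carry a `price_cents` field; nothing is actually booked.

```python
from typing import Dict, List, Tuple


def simulate_plan(
    steps: List[Dict], balance_cents: int
) -> Tuple[bool, int, List[str]]:
    """Dry-run the plan and return (ok, remaining balance, log)."""
    remaining = balance_cents
    log: List[str] = []
    for i, step in enumerate(steps):
        if step.get("action") != "book_ticket":
            # In this toy model only bookings cost money.
            log.append(f"step {i}: {step.get('action')} has no cost")
            continue
        price = step["price_cents"]
        if price > remaining:
            log.append(
                f"step {i}: ticket costs {price} but only {remaining} is left"
            )
            return False, remaining, log
        remaining -= price
        log.append(f"step {i}: booked for {price}, {remaining} left")
    return True, remaining, log
```

If the run succeeds, the remaining balance can be shown to the user before execution. If it fails, the log can be added to the next generation prompt in the same way as schema validation errors.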

As described in 'Position: LLMs Can't Plan, But Can Help Planning in LLM-Modulo Frameworks', critics can check both soft and hard constraints, providing users with an unoptimized but still good plan.

Sometimes, we can make an LLM call to refine the initial request. If a user sends just a few words as a task, such as 'I want to visit a City', we can ask the LLM to add the most common requirements to the request.

Having a plan storage helps to reduce execution time drastically, since a plan that has already passed validation can be reused for a similar request instead of being generated again.

I haven't mentioned fine-tuning or training a new LLM. OpenAI created a separate model for its critic, but in most cases few-shot prompting is enough for a start.

The last thing is to review your plan schema. If it contains a command that is potentially dangerous for the user, request approval before executing the generated plan.
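The approval step can be sketched as a small gate in front of the executor. The set of dangerous actions and the `run_step` and `ask_user` callables are hypothetical placeholders here; which commands count as dangerous depends on your own plan schema.

```python
from typing import Callable, Dict, List

# Hypothetical: actions that spend money or cannot be undone.
DANGEROUS_ACTIONS = {"book_ticket", "cancel_booking"}


def steps_needing_approval(steps: List[Dict]) -> List[Dict]:
    """Return the steps whose action is marked as dangerous."""
    return [s for s in steps if s.get("action") in DANGEROUS_ACTIONS]


def execute_plan(
    steps: List[Dict],
    run_step: Callable[[Dict], None],
    ask_user: Callable[[List[Dict]], bool],
) -> bool:
    """Execute the plan, asking for approval first if any step is risky.

    Returns False, without running anything, if the user declines.
    """
    risky = steps_needing_approval(steps)
    if risky and not ask_user(risky):
        return False
    for step in steps:
        run_step(step)
    return True
```

Asking once, before anything runs, avoids leaving a trip half-booked when the user declines a later step.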

References:

https://arxiv.org/abs/2402.01817 - Position: LLMs Can’t Plan, But Can Help Planning in LLM-Modulo Frameworks

https://arxiv.org/abs/2407.00215 - LLM Critics Help Catch LLM Bugs

https://arxiv.org/abs/2404.13050 - FlowMind: Automatic Workflow Generation with LLMs

https://arxiv.org/abs/2402.18679 - Data Interpreter: An LLM Agent for Data Science