Reducing hallucinations in LLMs using probabilities

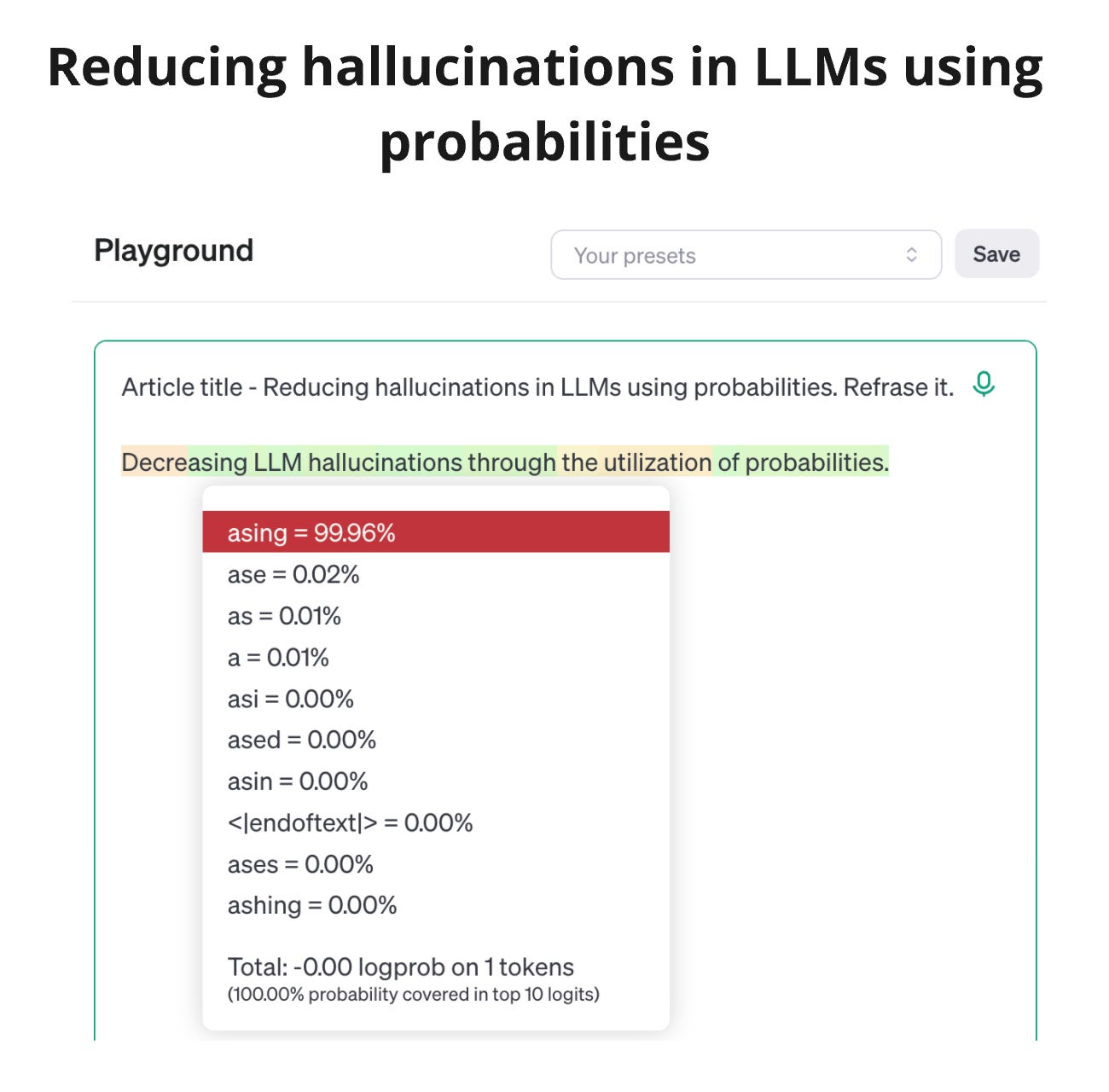

One obstacle to the wider adoption of LLMs is hallucination: the model generates a response that contains wrong facts. The user loses trust at that moment, and developers need to fix it. Many approaches rely on static or predefined rules and prompting patterns, such as ReAct or CoT, to deal with hallucinations. However, models served via an API can also return a probability for each token (usually as a log probability) alongside the text of the response. These probabilities are a numerical representation of how sure the model is of each token. When an LLM returns a token with low probability, it's a sign of a potential hallucination.
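To make the idea concrete, here is a minimal sketch of flagging low-probability tokens. It assumes the API response has already been reduced to (token, log probability) pairs. The function name, the 0.5 threshold, and the sample values are all made up for illustration and are not taken from any of the papers.

```python
import math

def low_confidence_tokens(token_logprobs, threshold=0.5):
    """Return (token, probability) pairs whose probability is below the threshold."""
    flagged = []
    for token, logprob in token_logprobs:
        prob = math.exp(logprob)  # a log probability converts back with exp()
        if prob < threshold:
            flagged.append((token, prob))
    return flagged

# Hypothetical values, invented for this example.
sample = [
    ("Einstein", -0.01),
    (" was", -0.02),
    (" born", -0.05),
    (" in", -0.03),
    (" Berlin", -1.6),
]
flagged = low_confidence_tokens(sample)
```

Only the token the model was unsure about ends up in `flagged`. In practice the threshold has to be tuned for each model and task.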

The paper 'Active Retrieval Augmented Generation' proposes a RAG system based on token probabilities. The solution is called Forward-Looking Active REtrieval augmented generation (FLARE). Don’t confuse it with Faithful Logic-Aided Reasoning and Exploration (also FLARE), which is a completely different approach.

FLARE focuses on when and what to retrieve during generation. For the when, we call for external information only when the model appears not to have enough knowledge, which we check by looking at the probabilities of the generated tokens. For the what, we need to request exactly what the model needs. This is achieved by generating a temporary next sentence, which is then used as the query for external information.

FLARE starts by retrieving an initial set of documents to generate the first sentence. The second sentence is first generated as a temporary one, and we analyze the token probabilities in it. If the probabilities are high, we accept the sentence and generate the next temporary sentence. If the probabilities are low, we send the temporary sentence as a search query for external documents. With the new set of external documents, we generate the second sentence again. The process ends when the model stops producing new tokens.
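The loop described above can be sketched roughly as follows. This is a simplification under stated assumptions, not the paper's implementation: `generate_sentence` and `retrieve` are hypothetical callables you would supply yourself, the threshold of 0.4 is arbitrary, and for brevity every sentence (including the first) goes through the same confidence check.

```python
from typing import Callable, List, Tuple

# A generated sentence: its text plus the probability of each of its tokens.
Sentence = Tuple[str, List[float]]

def flare_generate(
    question: str,
    generate_sentence: Callable[[str, List[str], str], Sentence],
    retrieve: Callable[[str], List[str]],
    threshold: float = 0.4,
    max_sentences: int = 10,
) -> str:
    """Generate an answer sentence by sentence, retrieving only when unsure."""
    docs = retrieve(question)  # initial set of documents
    answer = ""
    for _ in range(max_sentences):
        # Temporary next sentence, generated with the documents we already have.
        text, probs = generate_sentence(question, docs, answer)
        if not text:  # the model stopped producing new tokens
            break
        if probs and min(probs) < threshold:
            # Low confidence: use the temporary sentence as the search query,
            # then regenerate the sentence with the fresh documents.
            docs = retrieve(text)
            text, probs = generate_sentence(question, docs, answer)
            if not text:
                break
        answer = (answer + " " + text).strip()
    return answer
```

Passing the generator and the retriever in as functions keeps the control flow visible and makes the loop easy to try out with stubs before wiring in a real model and search backend.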

Interestingly, this approach can be extended to several sentences or even paragraphs, and it can also be narrowed down to specific parts of a sentence. The paper 'Mitigating Entity-Level Hallucination in Large Language Models' applies the approach to named entities.

The paper proposes Dynamic Retrieval Augmentation based on hallucination Detection (DRAD). It works by splitting a sentence into entities and calculating the probability and entropy for each entity. If these values show too much uncertainty compared with a threshold (low probability or high entropy), the entity is marked as hallucinated and requires replacement. The text before the hallucination is considered safe, so we only need to replace text starting from the first identified hallucination. At this point, the self-correction based on external knowledge (SEK) algorithm executes a search query and replaces the entity in question with the correct value. If the LLM generates 'Albert Einstein was born in Berlin, Germany', then DRAD will detect that Berlin is a hallucination, and SEK will replace it with Ulm.
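Here is a small sketch of an entity-level check in that spirit. Several parts are assumptions of the sketch rather than details from the paper: the entity's tokens are aggregated with min and max, low probability and high entropy are treated as the warning signs, the thresholds are arbitrary, and the candidate distributions are invented numbers.

```python
import math
from typing import Dict, List

def entropy(distribution: Dict[str, float]) -> float:
    """Shannon entropy (in nats) of a distribution over candidate tokens."""
    return -sum(p * math.log(p) for p in distribution.values() if p > 0)

def is_suspect_entity(
    token_probs: List[float],
    token_distributions: List[Dict[str, float]],
    min_prob: float = 0.5,
    max_entropy: float = 1.0,
) -> bool:
    """Flag an entity if its weakest token is unlikely or its most uncertain token is too uncertain."""
    entity_prob = min(token_probs)  # assumption: the weakest token represents the entity
    entity_entropy = max(entropy(d) for d in token_distributions)
    return entity_prob < min_prob or entity_entropy > max_entropy

# Hypothetical candidate distributions for two single-token entities.
berlin = is_suspect_entity(
    [0.35], [{"Berlin": 0.35, "Ulm": 0.30, "Munich": 0.20, "Bern": 0.15}]
)
germany = is_suspect_entity([0.97], [{"Germany": 0.97, "Prussia": 0.03}])
```

With these numbers `berlin` is flagged and `germany` is not. With a real API you usually see only the top few candidates per position, so the entropy computed this way is an approximation.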

The last example of this approach is DRAGIN: Dynamic Retrieval Augmented Generation based on the Information Needs of Large Language Models. The key component of the framework is Real-time Information Needs Detection (RIND). RIND's task is to understand when and what is needed to produce the result. Compared to the previous methods, it also uses the self-attention mechanism. Attention weights are used to detect the most important parts of the sentence, and because hosted APIs typically do not expose these weights, full access to the model is required. I've previously mentioned why this works in https://shchegrikovich.substack.com/p/what-do-all-these-layers-do-in-llm, when describing retrieval heads in LLMs.
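As a rough illustration of combining uncertainty with attention, the sketch below scores each token by its entropy, scaled by the largest attention weight that any later token pays to it, and ignores stopwords. This particular combination, the stopword list, the attention matrix, and the 0.5 trigger threshold are all assumptions made for the example and should not be read as the paper's exact formula.

```python
from typing import List, Set

def information_need_scores(
    tokens: List[str],
    entropies: List[float],
    attention: List[List[float]],  # attention[j][i]: weight token j puts on token i
    stopwords: Set[str],
) -> List[float]:
    """Score each token by uncertainty, weighted by how much later tokens attend to it."""
    scores = []
    n = len(tokens)
    for i, token in enumerate(tokens):
        later = [attention[j][i] for j in range(i + 1, n)]
        # No later token exists for the last position, so leave its entropy unscaled.
        influence = max(later) if later else 1.0
        is_content = 0.0 if token.lower() in stopwords else 1.0
        scores.append(entropies[i] * influence * is_content)
    return scores

# Hypothetical values for a six-token sentence.
tokens = ["Einstein", "was", "born", "in", "Berlin", "."]
entropies = [0.1, 0.05, 0.2, 0.05, 1.4, 0.1]
attention = [
    [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.7, 0.3, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.2, 0.3, 0.0, 0.0, 0.0],
    [0.3, 0.1, 0.4, 0.2, 0.0, 0.0],
    [0.3, 0.05, 0.35, 0.1, 0.2, 0.0],
    [0.1, 0.05, 0.15, 0.05, 0.6, 0.05],
]
scores = information_need_scores(tokens, entropies, attention, {"was", "in", "."})
needs_retrieval = max(scores) > 0.5  # arbitrary trigger threshold
```

Here the highest score lands on "Berlin", the token that is both uncertain and heavily attended to, so retrieval would be triggered at that point.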

All of these techniques trigger a call for external documents at the right moment: only when the information is really needed.

References:

https://arxiv.org/abs/2407.09417 - Mitigating Entity-Level Hallucination in Large Language Models

https://arxiv.org/abs/2305.06983 - Active Retrieval Augmented Generation

https://arxiv.org/abs/2403.10081 - DRAGIN: Dynamic Retrieval Augmented Generation based on the Information Needs of Large Language Models

https://cookbook.openai.com/examples/using_logprobs - Using logprobs