Never stop reasoning

LLM reasoning holds a promise for developers: you don't need to anticipate every possible input, as in traditional programming, where we must explicitly handle everything a user might send. Instead, you give the LLM instructions describing what it needs to do, and it behaves accordingly. Reasoning capabilities therefore define which problems an LLM can solve. The most popular technique today is chain-of-thought (CoT) prompting, but researchers never stop looking for better reasoning methods.

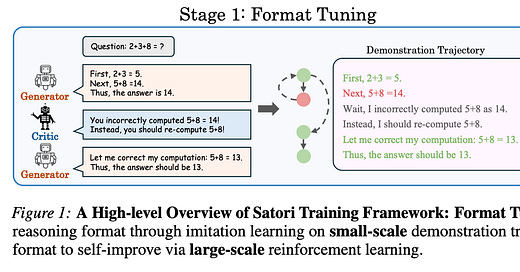

The Satori paper proposes a new method, Chain-of-Action-Thought (COAT). It extends CoT by adding meta-action tokens for three actions: continue reasoning, reflect, and explore alternative solutions. The LLM generates these tokens itself, and they help it navigate the reasoning process; in other words, they add self-reflection and self-exploration to reasoning. The continue token tells the model to generate the next step along the current reasoning path. The reflect token asks the model to verify its previous steps. The explore token asks the model to identify mistakes in its reasoning and try a new solution.
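To make the idea concrete, here is a minimal Python sketch that splits a COAT-style reasoning trace into steps labeled by the meta-action that introduced them. It assumes the tokens are spelled `<|continue|>`, `<|reflect|>` and `<|explore|>`, following the Satori paper's notation; treat the exact strings, the function name `split_coat_trace`, and the example trace as illustrative assumptions. It only parses a finished trace; it does not generate one.

```python
import re
from typing import List, Tuple

# Assumed spellings of the three COAT meta-action tokens.
_META_ACTION = re.compile(r"<\|(continue|reflect|explore)\|>")


def split_coat_trace(trace: str) -> List[Tuple[str, str]]:
    """Split a COAT-style trace into (meta_action, step_text) pairs.

    Text before the first meta-action token is labeled 'continue'.
    Empty segments between consecutive tokens are dropped.
    """
    steps: List[Tuple[str, str]] = []
    action = "continue"
    pos = 0
    for match in _META_ACTION.finditer(trace):
        text = trace[pos:match.start()].strip()
        if text:
            steps.append((action, text))
        action = match.group(1)  # the token decides how the next step is labeled
        pos = match.end()
    tail = trace[pos:].strip()
    if tail:
        steps.append((action, tail))
    return steps


if __name__ == "__main__":
    example = (
        "Let x = 3. <|continue|> Then 2x = 6. "
        "<|reflect|> Check: 2*3 = 6, correct. "
        "<|explore|> Alternatively, halve 6 to get 3."
    )
    for action, text in split_coat_trace(example):
        print(f"{action:>8}: {text}")
```

Grouping steps this way is handy for inspecting how often a model actually reflects or explores in its outputs.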

Because COAT introduces new tokens, the model first has to be trained to use them. Training has two stages: format tuning and reinforcement learning (RL). Format tuning fine-tunes an existing LLM so that it learns the new tokens, using training data prepared by a multi-agent data synthesis framework. The RL stage then strengthens the model's autoregressive search capability; in other words, it teaches the model to self-reflect and self-explore.
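The RL stage needs a reward signal. As a minimal sketch, assuming a math-style task where the final answer is written as `\boxed{...}`, a simple rule-based outcome reward could look like the following. This is a generic ±1 correctness reward, not necessarily Satori's exact reward design, and the function names are hypothetical. Because it scores only the final answer, the policy is left free to discover when a reflect or explore step pays off.

```python
import re
from typing import Optional

# Assumption: the final answer appears as \boxed{...} without nested braces.
_BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_answer(trace: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the trace, if any."""
    matches = _BOXED.findall(trace)
    return matches[-1].strip() if matches else None


def outcome_reward(trace: str, reference: str) -> float:
    """Generic rule-based reward: +1 for a correct final answer, -1 otherwise.

    A trace with no boxed answer is treated as wrong. Whitespace is ignored
    when comparing; no other normalization is attempted.
    """
    answer = extract_answer(trace)
    if answer is None:
        return -1.0
    same = answer.replace(" ", "") == reference.replace(" ", "")
    return 1.0 if same else -1.0


if __name__ == "__main__":
    trace = r"2x = 6, so x = 3. <|reflect|> Check: 2*3 = 6. \boxed{3}"
    print(outcome_reward(trace, "3"))
```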

An alternative approach comes from the Path-of-Thoughts (PoT) framework, which aims to reduce hallucinations. It consists of three modules: graph extraction, path identification, and reasoning. Graph extraction parses the input prompt into nodes and edges by finding entities, their relationships, and their attributes; the result is a cognitive map. Path identification then finds the reasoning paths in this graph, that is, the chains of edges connecting the two nodes whose relation the query asks about. Finally, the reasoning module follows each path, either with a simple LLM call using CoT prompting or with a symbolic reasoner; which of the two is used is decided by rules.
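The three PoT modules can be sketched in Python on a toy kinship question. This is a minimal illustration under several assumptions: the graph-extraction step is replaced by hand-written edges (in PoT an LLM produces them), the composition table `RULES` is hypothetical, and only the symbolic-reasoner branch is shown, not the LLM-with-CoT branch. All names are illustrative.

```python
from typing import Dict, List, Optional, Set, Tuple

# Stand-in for graph extraction. Edge (x, r, y) means "y is x's r".
EDGES: List[Tuple[str, str, str]] = [
    ("Carol", "father", "Bob"),   # Bob is Carol's father
    ("Bob", "mother", "Alice"),   # Alice is Bob's mother
]

# Hypothetical composition rules: (r1, r2) -> r means
# "y is x's r1 and z is y's r2" implies "z is x's r".
RULES: Dict[Tuple[str, str], str] = {
    ("father", "mother"): "grandmother",
    ("father", "father"): "grandfather",
    ("mother", "mother"): "grandmother",
    ("mother", "father"): "grandfather",
}


def build_graph(edges: List[Tuple[str, str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Adjacency list: node -> [(relation, neighbor), ...]."""
    graph: Dict[str, List[Tuple[str, str]]] = {}
    for head, rel, tail in edges:
        graph.setdefault(head, []).append((rel, tail))
    return graph


def find_paths(graph, source: str, target: str, max_len: int = 4) -> List[List[str]]:
    """Path identification: every simple path from source to target,
    returned as the list of relations along it (iterative DFS)."""
    paths: List[List[str]] = []
    stack = [(source, [], {source})]
    while stack:
        node, rels, seen = stack.pop()
        if node == target and rels:
            paths.append(rels)
            continue
        if len(rels) >= max_len:
            continue
        for rel, nxt in graph.get(node, []):
            if nxt not in seen:
                stack.append((nxt, rels + [rel], seen | {nxt}))
    return paths


def compose(rels: List[str], rules: Dict[Tuple[str, str], str]) -> Optional[str]:
    """Symbolic reasoner: fold relations left to right with the rule table."""
    current: Optional[str] = rels[0]
    for rel in rels[1:]:
        current = rules.get((current, rel))
        if current is None:
            return None  # no rule applies; a real system might fall back to an LLM
    return current


def answer(edges, source: str, target: str) -> Set[str]:
    """What is `target` to `source`? Collects answers from all paths."""
    graph = build_graph(edges)
    answers = {compose(p, RULES) for p in find_paths(graph, source, target)}
    answers.discard(None)
    return answers


if __name__ == "__main__":
    print(answer(EDGES, "Carol", "Alice"))
```

Here the query "What is Alice to Carol?" follows the single path father → mother, which the rule table composes to grandmother. Collecting answers from every path is just one simple way to use multiple paths; how PoT itself aggregates them is not modeled here.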

COAT uses special tokens to navigate reasoning, which requires a newly fine-tuned model. PoT, on the other hand, leverages existing LLMs but changes how we prompt them and adds extra processing steps. The CodeI/O paper takes a third route: it shows how specially crafted training data can teach a model to reason better.

Instead of showing the model examples of good reasoning, CodeI/O teaches it to predict the inputs and outputs of code. For instance, we can take a Python function and an input and ask the model what the function returns, or give it the output and ask for an input that produces it, with the model reasoning in natural language. According to the authors, this teaches the model basic reasoning primitives: "logic flow planning, state-space searching, decision tree traversal, and modular decomposition."
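A minimal Python sketch of turning one function into the two kinds of prediction tasks is shown below. The example function `running_max`, the prompt wording, and the helper names are hypothetical; the actual CodeI/O pipeline works on a large, diverse code corpus and pairs each task with natural-language reasoning, which is not shown here. Executing the code provides the ground truth, and it also lets a predicted input be checked by running it, since several different inputs can produce the same output.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical example function, kept as source text so it can be shown
# to the model verbatim and also executed to obtain ground truth.
SOURCE = '''
def running_max(nums):
    best, out = float("-inf"), []
    for n in nums:
        best = max(best, n)
        out.append(best)
    return out
'''


def load_function(source: str, name: str) -> Callable[..., Any]:
    """Execute trusted source code and return the named function."""
    namespace: Dict[str, Any] = {}
    exec(source, namespace)
    return namespace[name]


def make_prediction_tasks(source: str, name: str, inp: Dict[str, Any]) -> Dict[str, Dict[str, str]]:
    """Build one output-prediction task and one input-prediction task."""
    fn = load_function(source, name)
    out_json = json.dumps(fn(**inp))
    inp_json = json.dumps(inp)
    return {
        "output_prediction": {
            "prompt": f"{source}\nGiven the input {inp_json}, predict the output of {name}. Reason step by step.",
            "answer": out_json,
        },
        "input_prediction": {
            "prompt": f"{source}\nPredict an input for which {name} returns {out_json}. Reason step by step.",
            "answer": inp_json,
        },
    }


def check_input_prediction(fn: Callable[..., Any], predicted_input_json: str, expected_output_json: str) -> bool:
    """Verify a predicted input by executing it: any input that reproduces
    the expected output counts as correct, not only the original one."""
    predicted = json.loads(predicted_input_json)
    return fn(**predicted) == json.loads(expected_output_json)


if __name__ == "__main__":
    tasks = make_prediction_tasks(SOURCE, "running_max", {"nums": [3, 1, 4, 1, 5]})
    print(tasks["output_prediction"]["prompt"])
    print("expected:", tasks["output_prediction"]["answer"])
```

For the input-prediction task, both `{"nums": [3, 1, 4, 1, 5]}` and `{"nums": [3, 3, 4, 4, 5]}` yield the same running maximum, which is why checking by execution is more forgiving than comparing against the single reference input.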

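Each of the three papers reports better results than the baselines it compares against. More importantly, the methods are orthogonal to each other: they change different things (new meta-action tokens learned through training, a multi-step prompting pipeline, and reasoning-oriented training data), so in principle they can be used together.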

References:

Paper: CodeI/O: Condensing Reasoning Patterns via Code Input-Output Prediction - https://arxiv.org/abs/2502.07316

Paper: Satori: Reinforcement Learning with Chain-of-Action-Thought Enhances LLM Reasoning via Autoregressive Search - https://arxiv.org/abs/2502.02508

Paper: Path-of-Thoughts: Extracting and Following Paths for Robust Relational Reasoning with Large Language Models - https://arxiv.org/abs/2412.17963