LLMs in highly specialized domains

General-purpose LLMs often struggle to answer questions in highly specialized domains such as chemistry, math, finance, and biology. Still, there are several ways to build LLMs with knowledge of these domains.

The first issue is the dataset. An LLM is trained on a well-curated but still limited dataset. A finance LLM needs training data rich in financial information, which the data behind a general-purpose LLM often lacks. To solve this problem, we can take a pre-trained model and fine-tune it on financial data. That is what was done for InvestLM, which was created by fine-tuning LLaMA-65B on data from StackExchange QFin, CFA questions, SEC filings, and financial NLP tasks. That is the first option for creating a domain-specific LLM: fine-tune a pre-trained model on a high-quality instruction dataset.
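To make the instruction-dataset idea concrete, here is a minimal sketch of how one raw question/answer record might be turned into a prompt/completion pair for supervised fine-tuning. The template text, the field names (`instruction`, `context`, `answer`) and the sample record are assumptions made up for illustration; the InvestLM paper may format its data differently.

```python
# Hypothetical prompt template; not taken from the InvestLM paper.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{context}\n\n"
    "### Response:\n"
)


def build_example(record: dict) -> dict:
    """Turn one raw record into a prompt/completion pair for fine-tuning."""
    prompt = PROMPT_TEMPLATE.format(
        instruction=record["instruction"].strip(),
        context=record.get("context", "").strip(),
    )
    return {"prompt": prompt, "completion": record["answer"].strip()}


# Made-up record, for illustration only.
raw = {
    "instruction": "Explain what a 10-K filing is.",
    "context": "",
    "answer": "A 10-K is an annual report that US public companies file with the SEC.",
}

example = build_example(raw)
print(example["prompt"] + example["completion"])
```

During fine-tuning, the loss is typically computed on the completion part only, so the model learns to answer rather than to reproduce the template.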

The problem gets more complicated when a domain has its own way of representing information, as geometry does. Solving a geometry task means providing a proof, and that is what the DeepMind team set out to do with the AlphaGeometry model. LLMs are weak at generating proofs and at symbolic reasoning, so a new formal language for writing geometry proofs was developed. Proofs in this language can be verified mechanically, like programs in a programming language, and remain easy for humans to read. The team then generated 100M synthetic records in the new language to train the model. That is the second option: use an existing transformer architecture, create a new language that converts the domain into something an LLM can learn, and train on synthetic data.
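To illustrate what "mechanically verifiable" means for a proof language, here is a toy sketch of a step-by-step proof checker. Everything in it is invented for illustration: the fact format, the two rules (`para_trans`, `para_sym`) and the `verify` function are assumptions, not AlphaGeometry's actual language or rule set.

```python
from typing import NamedTuple


class Step(NamedTuple):
    rule: str          # name of the rule being applied
    inputs: tuple      # facts the rule consumes
    conclusion: tuple  # fact the step claims to derive


def para_trans(a, b):
    """x || y and y || z  =>  x || z"""
    if a[0] == b[0] == "para" and a[2] == b[1]:
        return ("para", a[1], b[2])
    return None


def para_sym(a):
    """x || y  =>  y || x"""
    if a[0] == "para":
        return ("para", a[2], a[1])
    return None


# Toy rule table: rule name -> (number of inputs, function).
RULES = {"para_trans": (2, para_trans), "para_sym": (1, para_sym)}


def verify(premises, steps, goal):
    """Return True only if every step follows from known facts and the goal is reached."""
    known = set(premises)
    for step in steps:
        if step.rule not in RULES:
            return False
        arity, rule = RULES[step.rule]
        if len(step.inputs) != arity:
            return False
        if any(fact not in known for fact in step.inputs):
            return False
        if rule(*step.inputs) != step.conclusion:
            return False
        known.add(step.conclusion)
    return goal in known


premises = [("para", "AB", "CD"), ("para", "CD", "EF")]
proof = [
    Step("para_trans", (premises[0], premises[1]), ("para", "AB", "EF")),
    Step("para_sym", (("para", "AB", "EF"),), ("para", "EF", "AB")),
]
print(verify(premises, proof, ("para", "EF", "AB")))
```

Because every step is checked against a fixed rule table, a generated proof can be accepted or rejected automatically, which is what makes large-scale synthetic training data practical in such a setup.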

If we compare the results of the first (InvestLM) and second (AlphaGeometry) options, InvestLM performs similarly to closed-source models, while AlphaGeometry outperforms the previous best method. AlphaGeometry was also trained without human demonstrations.

We don't always need to create a new formal language, and ChemLLM is a good example. Chemistry already has a standard notation for representing molecules (SMILES) and IUPAC nomenclature for naming compounds. The paper shows how to train a domain-specific LLM when industry standards for representing information already exist.

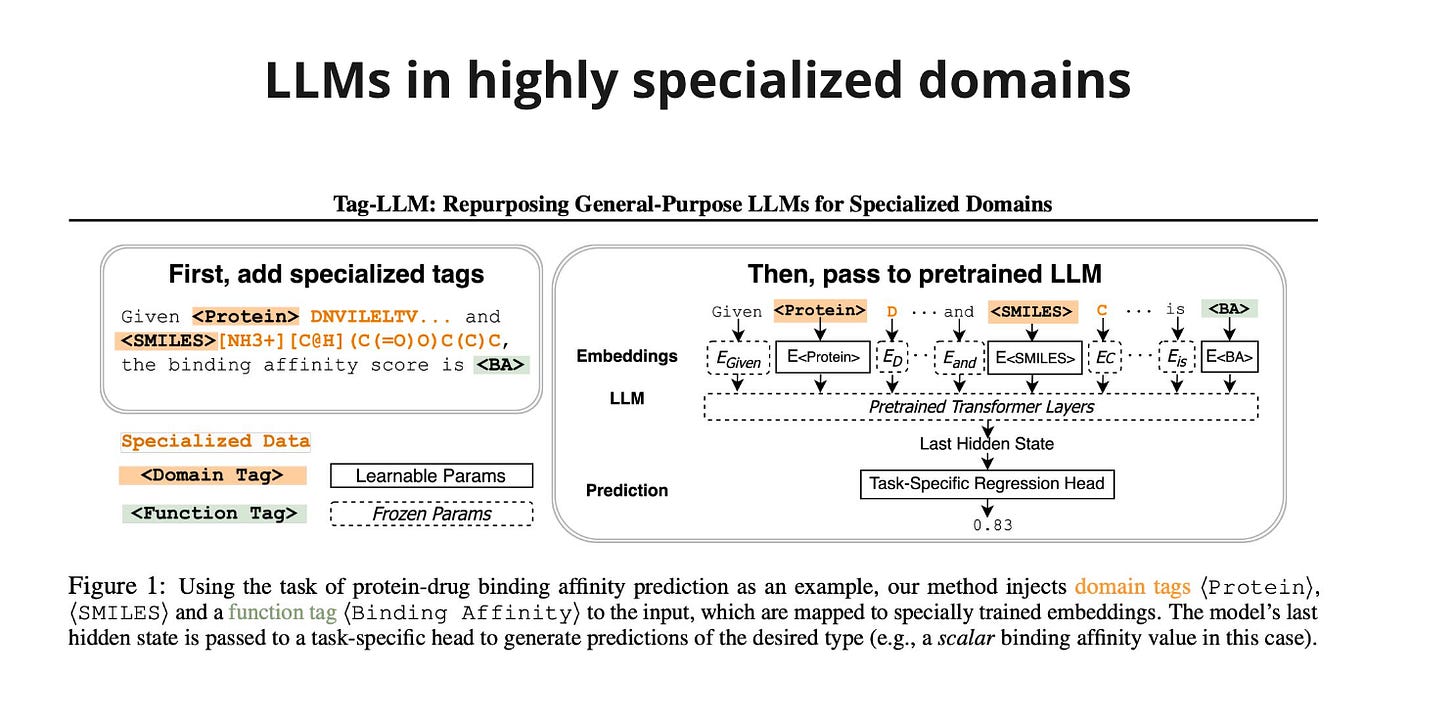
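As a small illustration of why an existing notation such as SMILES is convenient for language models, the sketch below splits SMILES strings into chemically meaningful tokens. The regular expression is a simplified assumption that covers only common SMILES symbols; it is not the tokenizer used by ChemLLM and it does not check chemical validity.

```python
import re
from typing import List

# Simplified token pattern (an assumption, not a full SMILES grammar).
SMILES_TOKEN = re.compile(
    r"\[[^\]]+\]"            # bracket atoms such as [NH4+] or [C@@H]
    r"|Br|Cl"                # two-letter atoms
    r"|[BCNOSPFI]"           # one-letter aliphatic atoms
    r"|[bcnosp]"             # aromatic atoms
    r"|%\d{2}|\d"            # ring-closure labels
    r"|[()=#\-+\\/:.@*~$]"   # branches, bonds, and other symbols
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into tokens; fail if any character is not covered."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"unrecognized characters in SMILES: {smiles!r}")
    return tokens


for name, smiles in [
    ("ethanol", "CCO"),
    ("acetyl chloride", "CC(=O)Cl"),
    ("benzene", "c1ccccc1"),
]:
    print(name, tokenize_smiles(smiles))
```

Keeping `Cl` and bracket atoms as single tokens means the model sees one atom per token instead of arbitrary character fragments.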

It seems that the idea of creating domain-specific LLMs can be generalized. The Tag-LLM paper provides an algorithm for repurposing a general-purpose LLM for any domain. For each new domain, we need to specify domain tags (used to mark domain-specific representations of information, such as SMILES) and function tags (used to mark domain-specific tasks, such as predicting molecular properties). The next step is to use a three-stage protocol to learn these tags using auxiliary data and domain knowledge.
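The role of the two tag types can be sketched at the string level as follows. The tag names and the `tag_input` helper are hypothetical, and the sketch only shows where tags sit in the model input; learning the tags themselves through the three-stage protocol is not shown.

```python
# Hypothetical tag names, for illustration only.
DOMAIN_TAGS = {"smiles": "<SMILES>", "protein": "<Protein>"}
FUNCTION_TAGS = {"solubility": "<Solubility>", "binding_affinity": "<BindingAffinity>"}


def tag_input(payloads: dict, function: str) -> str:
    """Mark each domain-specific payload with its domain tag, then append the function tag."""
    parts = []
    for domain, payload in payloads.items():
        # The domain tag tells the model how to read the payload that follows it.
        parts.append(f"{DOMAIN_TAGS[domain]} {payload}")
    # The function tag tells the model which task to perform on the tagged inputs.
    parts.append(FUNCTION_TAGS[function])
    return " ".join(parts)


prompt = tag_input({"smiles": "CCO"}, "solubility")
print(prompt)
```

The same domain tag can be reused across tasks, so adding a new task for an existing domain would only require a new function tag in this setup.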

Resources:

https://arxiv.org/abs/2309.13064 - InvestLM: A Large Language Model for Investment using Financial Domain Instruction Tuning

https://www.nature.com/articles/s41586-023-06747-5 - Solving olympiad geometry without human demonstrations

https://arxiv.org/abs/2402.06852 - ChemLLM: A Chemical Large Language Model

https://arxiv.org/abs/2402.05140 - Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains