LLM: from next-token prediction to meaningful answers

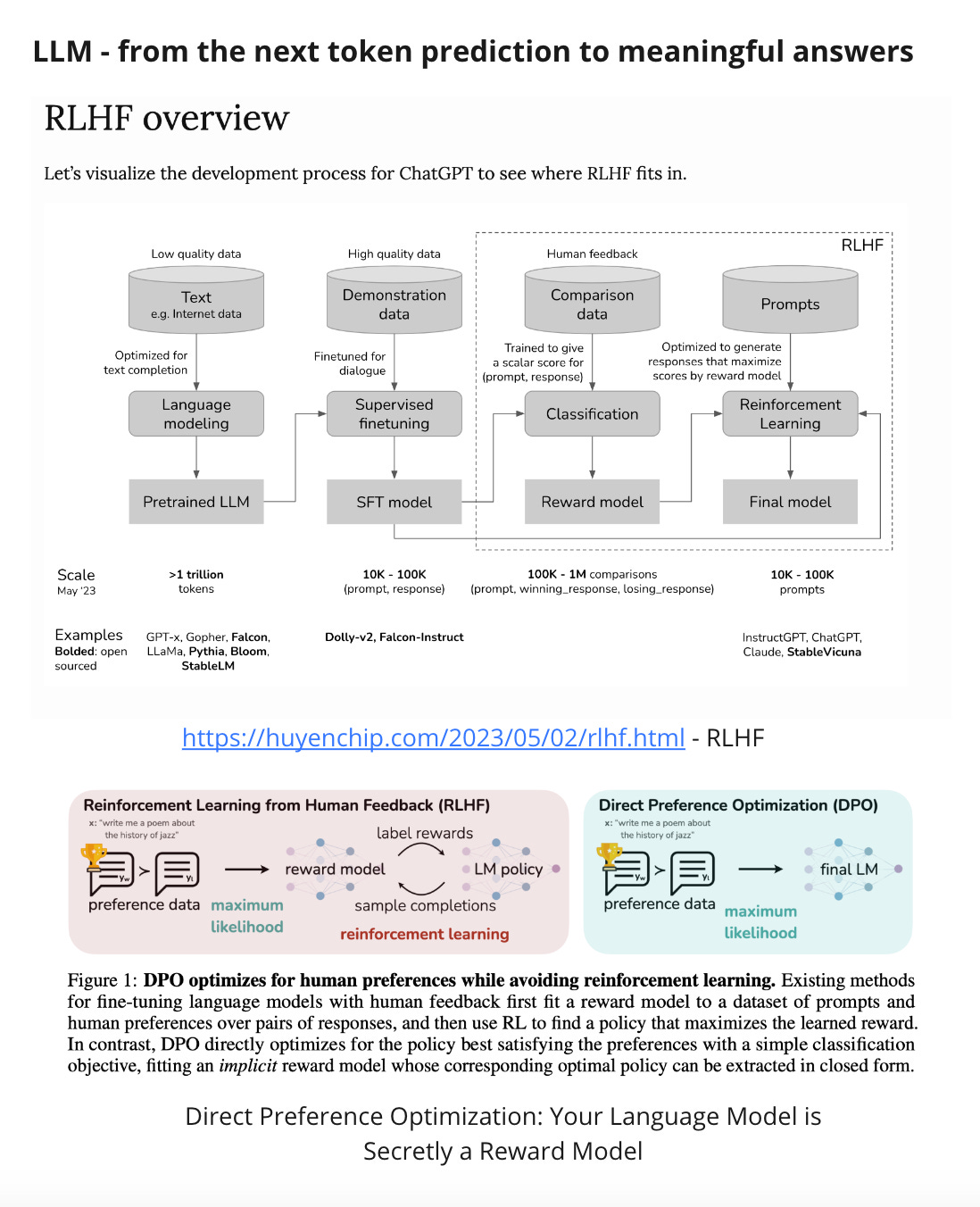

In very simplified terms, an LLM is a model that predicts the next token. The path from next-token prediction to a meaningful answer has three steps: pre-training, supervised fine-tuning (SFT), and reinforcement learning from human feedback (RLHF).
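To make "predicts the next token" concrete, here is a minimal sketch. It is not how a real LLM works internally: it assumes a toy bigram model that simply counts which word follows which, and the function names and the corpus are made up for illustration. The generation loop, though, has the same shape as in an LLM: predict one token, append it, repeat.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count, for every token, which tokens follow it in the training text."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, prompt, max_new_tokens=5):
    """Autoregressive loop: predict one token, append it, and repeat."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out

# Toy corpus (an assumption for illustration), split on whitespace.
corpus = "the cat sat on the mat . the cat sat on the sofa .".split()
model = train_bigram(corpus)
print(generate(model, ["the"], max_new_tokens=3))  # ['the', 'cat', 'sat', 'on']
```

With a larger `max_new_tokens`, this toy keeps cycling through "the cat sat on the cat sat on ...". Nothing in plain next-token prediction tells it that the answer is complete.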

A pre-trained model is what we get when we take an architecture such as the Transformer or Mamba and train it on next-token prediction. The result is a model that can finish a sentence but then keeps generating text of little use, because at this stage the model doesn't know when to stop. Mistral-7B-v0.1 is a good example of a pre-trained model. More than 90% of the training time is spent on this first step.
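The training signal for next-token prediction is usually a cross-entropy loss: at every position, the model is penalized according to how little probability it assigned to the token that actually came next. The sketch below assumes the model's raw scores (logits) are already given as plain lists; the function names are illustrative, not taken from any library.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy of predicting token t+1 at position t.

    logits_per_position[t] holds the scores over the vocabulary that the
    model produced after reading token_ids[: t + 1].
    """
    losses = []
    for t in range(len(token_ids) - 1):
        probs = softmax(logits_per_position[t])
        target = token_ids[t + 1]  # the token that actually came next
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Vocabulary of 4 tokens, sequence of 3 token ids.
token_ids = [0, 1, 2]
uniform = [[0.0, 0.0, 0.0, 0.0]] * 3      # model has no idea
confident = [[0.0, 5.0, 0.0, 0.0],        # after token 0, expects token 1
             [0.0, 0.0, 5.0, 0.0],        # after token 1, expects token 2
             [0.0, 0.0, 0.0, 0.0]]        # last position has no target
print(next_token_loss(uniform, token_ids))
print(next_token_loss(confident, token_ids))
```

A model that spreads probability evenly over a vocabulary of size V gets a loss of log(V); pre-training pushes that number down across the whole corpus.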

Once we have a pre-trained model, we can move to the next step, supervised fine-tuning (SFT). Where the previous step used low-quality, Internet-scale data, this step needs high-quality pairs of request and response. We provide the model with examples of expected tasks and answers. The training objective of the first and second steps is the same: predict the next token. The difference is that the data is now high quality and shows how the model will be used. In this step, the model also learns when to stop generating.
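One way to picture an SFT training example is a request and a response joined into a single token sequence that ends with an end-of-sequence marker. The sketch below rests on several assumptions that are not from the text above: whitespace splitting stands in for a real tokenizer, `<eos>` is a hypothetical marker, and the loss is computed only on the response tokens, which is a common choice rather than a requirement.

```python
EOS = "<eos>"  # hypothetical end-of-sequence marker

def build_sft_example(request, response):
    """Join a request/response pair into one training sequence.

    Returns the tokens and a loss mask: 0 = ignore this position in the
    loss, 1 = train the model to predict this token.
    """
    prompt_tokens = request.split()
    # Appending EOS to every response is what teaches the model where to stop.
    response_tokens = response.split() + [EOS]
    tokens = prompt_tokens + response_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask

tokens, loss_mask = build_sft_example("Translate cat to French", "chat")
print(tokens)     # ['Translate', 'cat', 'to', 'French', 'chat', '<eos>']
print(loss_mask)  # [0, 0, 0, 0, 1, 1]
```

Because every response in the dataset ends with the marker, predicting it becomes part of the ordinary next-token objective, and generation can stop as soon as the model emits it.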

In theory, we could stop here and start using the model; in practice, the third step, reinforcement learning from human feedback (RLHF), created LLMs as we know them today. After the second step, the model knows how to generate a good-looking answer but knows little about how to generate an answer that people will like. This step needs high-quality data from experts: we show them several answers to the same question and ask them to compare the answers. Once we have collected enough comparisons, up to a million, we train an additional model, the reward model. The reward model maps an answer from the LLM to a score of how much people would like it; in other words, it predicts human preferences. The reward model is then used to optimize the LLM obtained after the SFT step.
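A reward model is commonly trained on such comparisons with a pairwise (Bradley-Terry style) loss: the answer the expert preferred should get a higher score than the one they rejected. The sketch below assumes the scores are already computed and only shows the loss; the function names are illustrative.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def preference_probability(reward_chosen, reward_rejected):
    """Probability, under the reward model, that the chosen answer wins."""
    return sigmoid(reward_chosen - reward_rejected)

def pairwise_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood of the expert's choice."""
    return -math.log(preference_probability(reward_chosen, reward_rejected))

# The reward model agrees with the expert: small loss.
print(pairwise_loss(reward_chosen=2.0, reward_rejected=0.0))
# The reward model disagrees with the expert: large loss.
print(pairwise_loss(reward_chosen=0.0, reward_rejected=2.0))
```

Only the difference between the two scores matters, so the reward model learns a ranking of answers rather than an absolute scale.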

After these three steps, the model generates meaningful answers. LLMs trained beyond the pre-training step usually have instruct or chat in the model name, such as Mistral-7B-Instruct-v0.1. A more recent development for the last step is Direct Preference Optimization (DPO). DPO doesn't require a separate reward model; it optimizes the LLM directly on the preference data.
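The DPO loss for a single preference pair, as given in the DPO paper, can be sketched as follows. It compares how much the model being trained (the policy) has shifted probability toward the chosen answer and away from the rejected one, relative to a frozen reference model, typically the SFT model. The log-probabilities are assumed to be given, and `beta=0.1` is an illustrative value, not a recommendation from the text above.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of answers.

    Each argument is the total log-probability that a model assigns to an
    answer given the prompt.
    """
    # How much more (in log space) the policy likes each answer than the reference does.
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(margin))
    return -math.log(1.0 / (1.0 + math.exp(-margin)))

# Policy identical to the reference: no preference learned yet.
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Policy has moved toward the chosen answer and away from the rejected one.
print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

The expression has the same shape as a pairwise reward-model loss, with the scaled log-probability ratio playing the role of the reward. That is why no separate reward model is needed.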

A good question is: what exactly is an LLM? After each step, we get a model that is called an LLM. After the first step, the pre-trained model can be further fine-tuned on a specific task. The second and third steps are often grouped together under the name RLHF, and the third step significantly improves on SFT alone; the resulting model can be used in chat applications and via an API. Only after the last step can the model be used in conjunction with RAG.

Another aspect is the opportunity to build a new model. We can focus on architectural elements, such as replacing the Transformer, RLHF, or DPO. We can focus on creating our own unique private dataset. Or we can think about improving results by tweaking the model for human feedback.

References:

https://towardsdatascience.com/supervised-fine-tuning-sft-with-large-language-models-0c7d66a26788 - Supervised Fine-Tuning (SFT) with Large Language Models

https://huggingface.co/blog/rishiraj/finetune-llms - Fine-Tuning LLMs: Supervised Fine-Tuning and Reward Modelling

https://arxiv.org/abs/2305.18290 - Direct Preference Optimization: Your Language Model is Secretly a Reward Model

https://huggingface.co/blog/rlhf - Illustrating Reinforcement Learning from Human Feedback (RLHF)

https://vijayasriiyer.medium.com/rlhf-training-pipeline-for-llms-using-huggingface-821b76fc45c4 - RLHF Training Pipeline for LLMs Using Huggingface

https://huyenchip.com/2023/05/02/rlhf.html - RLHF: Reinforcement Learning from Human Feedback

https://medium.com/@sinarya.114/d-p-o-vs-r-l-h-f-a-battle-for-fine-tuning-supremacy-in-language-models-04b273e7a173 - D.P.O vs R.L.H.F : A Battle for Fine-Tuning Supremacy in Language Models