Improving software quality with LLMs

One way to improve the quality of software products is to use assertions. An assertion is a check in the code for a condition that must always be true. If the assertion is false, it means that an error has occurred, and execution must be stopped. As runtime checks, assertions help prevent errors from spreading through the product. The drawback is that adding them takes time, and you need to know where they will be most useful.
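To make the idea concrete, here is a minimal sketch of a function guarded by assertions. The function `apply_discount` and its rules are invented for illustration and do not come from any of the papers below.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price after applying a percentage discount."""
    # Preconditions: the inputs must be in a sensible range.
    assert price >= 0, "price must be non-negative"
    assert 0 <= percent <= 100, "percent must be between 0 and 100"

    discounted = price * (1 - percent / 100)

    # Postcondition: a discount never increases the price.
    assert 0 <= discounted <= price, "discounted price out of range"
    return discounted
```

Calling `apply_discount(200, 150)` stops with an `AssertionError` right away instead of returning a negative price to the rest of the system. Keep in mind that Python skips `assert` statements when run with the `-O` flag, so they complement input validation rather than replace it.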

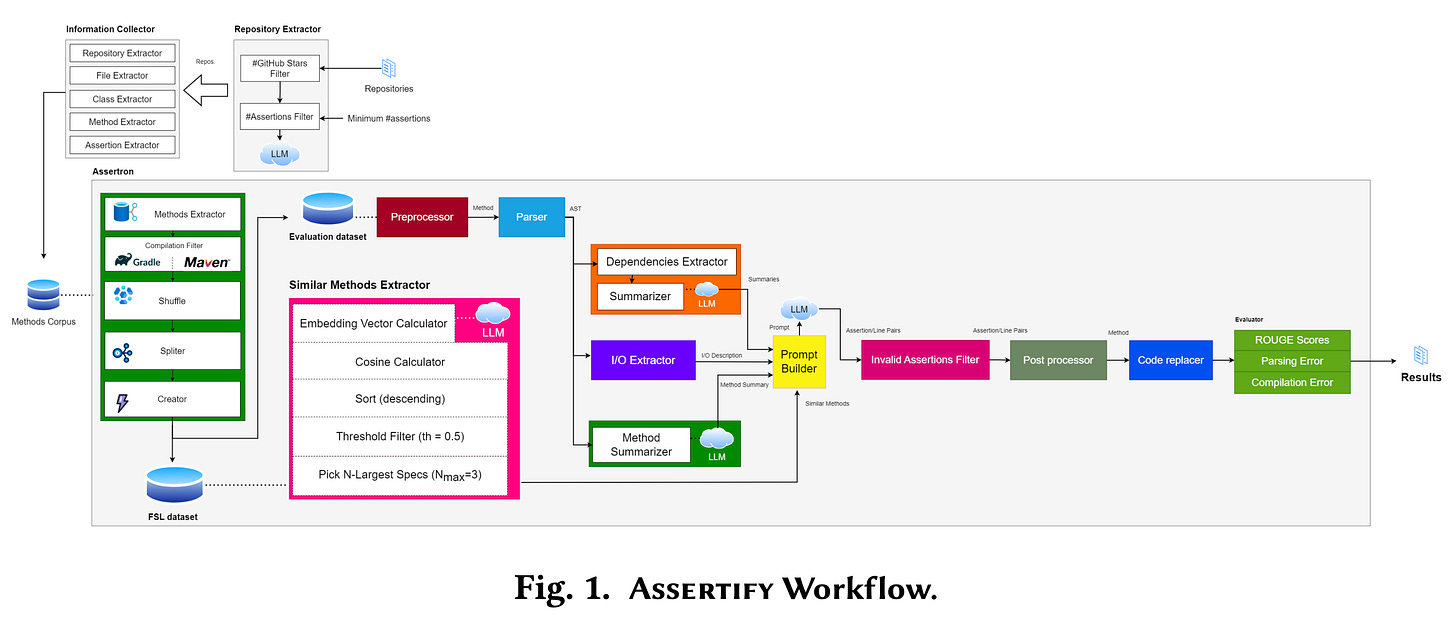

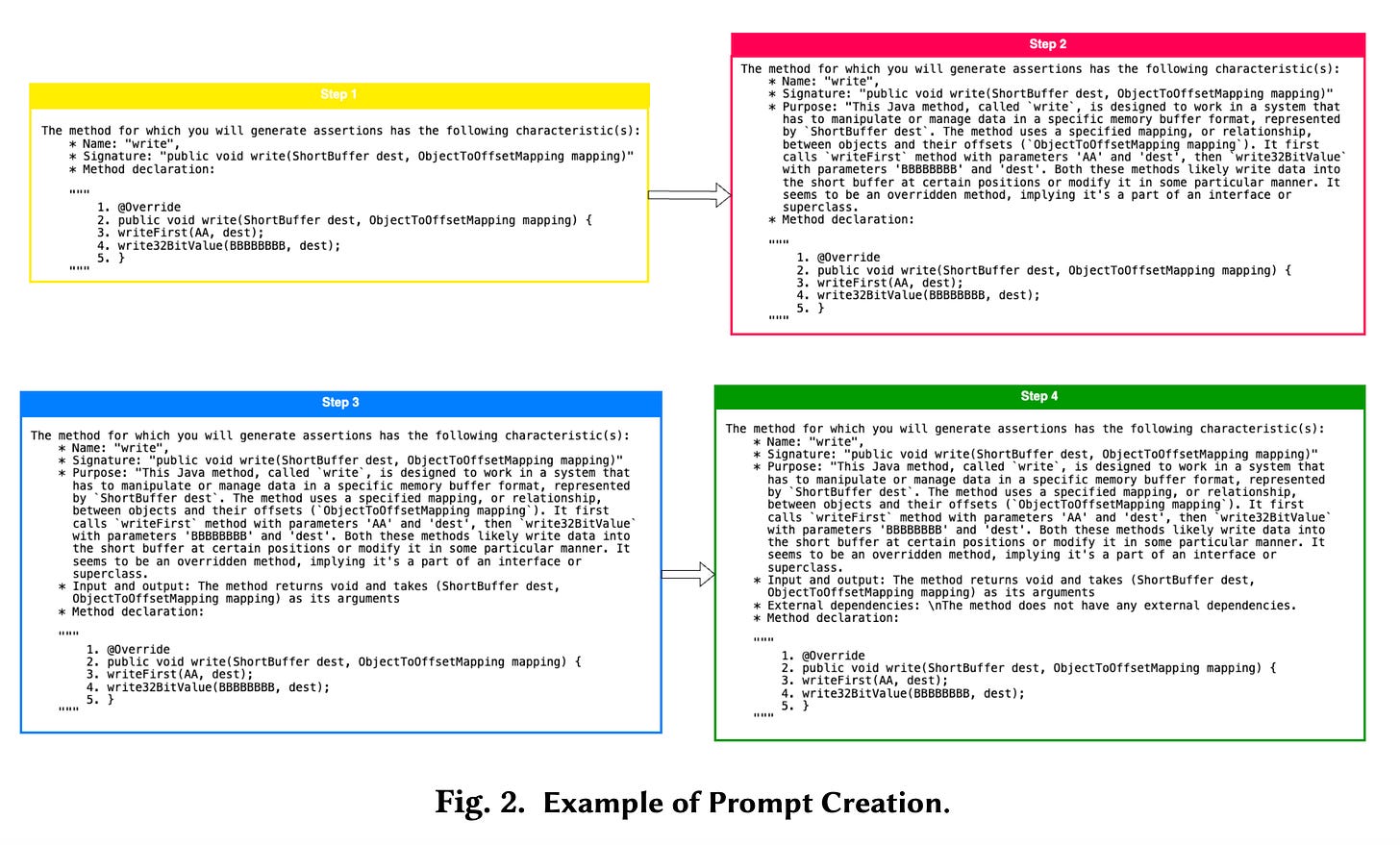

The ASSERTIFY paper focuses on automating the addition of assertions to production code. The proposed approach is based on extracting contextual information and using a few-shot prompting technique. Generation starts with a pre-processor, which removes all comments and existing assertions that could distract the model. The prompt builder adds the method's name, signature, source code, description, inputs, outputs, external dependencies and similar methods to the prompt. To build such a detailed prompt, the builder needs additional modules: a similar-method extractor (uses embeddings), a method summarizer (LLM call) and a dependency extractor (LLM call). The result of executing the prompt is a rewritten method with new assertions. After that, the paper uses an evaluator that performs semantic and syntactic analysis, including checks for duplicate assertions and compilation errors.
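The pre-processing and prompt-building steps could be sketched roughly as follows. This is not the paper's implementation: `MethodContext`, `preprocess` and `build_prompt` are hypothetical names, the pre-processor is a naive line filter for Python source, and the description, dependencies and similar methods are passed in as plain strings instead of being produced by LLM calls or an embedding search.

```python
from dataclasses import dataclass, field


@dataclass
class MethodContext:
    """Hypothetical container for the context fields listed above."""
    name: str
    signature: str
    source: str
    description: str  # would come from the method summarizer (LLM call)
    inputs: str
    outputs: str
    dependencies: list[str] = field(default_factory=list)     # dependency extractor
    similar_methods: list[str] = field(default_factory=list)  # embedding search


def preprocess(source: str) -> str:
    """Naively drop full-line comments and single-line assert statements."""
    kept = []
    for line in source.splitlines():
        stripped = line.strip()
        if stripped.startswith("#") or stripped.startswith("assert "):
            continue
        kept.append(line)
    return "\n".join(kept)


def build_prompt(ctx: MethodContext) -> str:
    """Assemble the context sections into one prompt string."""
    sections = [
        ("Method name", ctx.name),
        ("Signature", ctx.signature),
        ("Source code", preprocess(ctx.source)),
        ("Description", ctx.description),
        ("Inputs", ctx.inputs),
        ("Outputs", ctx.outputs),
        ("External dependencies", "\n".join(ctx.dependencies) or "none"),
        ("Similar methods", "\n\n".join(ctx.similar_methods) or "none"),
    ]
    body = "\n\n".join(f"## {title}\n{text}" for title, text in sections)
    task = "## Task\nRewrite the method above and add assertions where they are useful."
    return f"{body}\n\n{task}"
```

In a real pipeline, the returned string would be sent to the model, and the rewritten method would then go to the evaluator.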

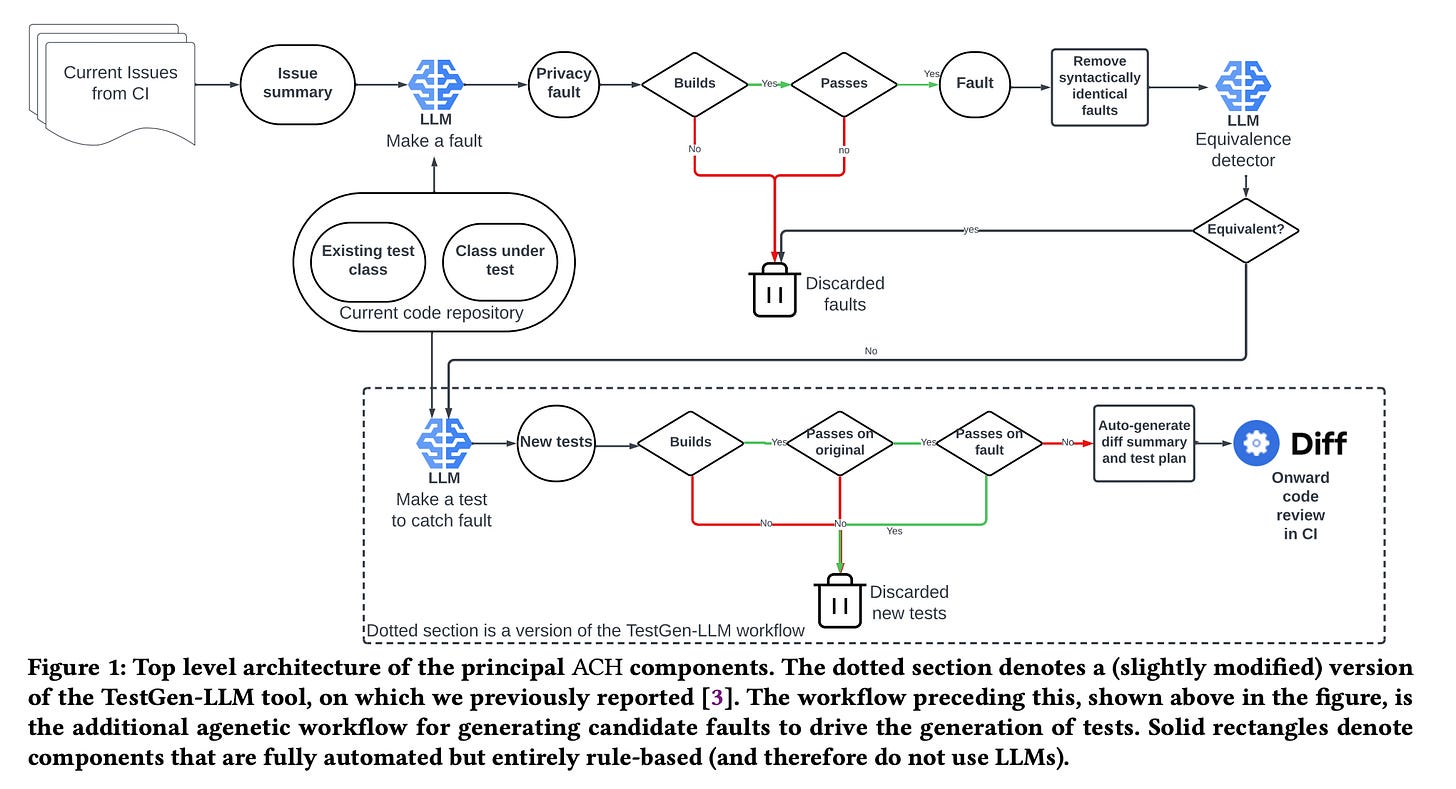

Meta has shared a new paper on test generation - Mutation-Guided LLM-based Test Generation. Mutation testing is a method of testing software by introducing small changes (mutants) into the source code and checking whether the test suite detects them. The idea is to focus test case generation on finding bugs before they reach production. Meta has developed an agent that automatically improves quality by finding previously undetected issues. The difference from classical mutation testing is that, with the help of LLMs, we can focus on a specific set of problems and replace a simple rule-based engine with LLM reasoning. The result is a new set of test cases aimed at 'hardening the platform against regressions'.

The agent focuses on privacy hardening. First, it generates a mutant (a code change) that introduces a potential fault into the source code. To do this, the agent has access to previously reported privacy-related issues. Then, we check that the mutated code compiles, still passes the existing test cases (so no current test catches the fault) and is not a duplicate of an earlier mutant. This ensures that the mutant exposes a real gap in the test suite, so a test that catches it can improve quality. The next step is to generate a test case. The new test case is validated against the original source code (must pass) and against the version with the mutant (must fail). Internal testing showed that 73% of the generated tests were accepted, and 36% were judged privacy-related.
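The two validation rules (the mutant survives the existing tests, and the new test passes on the original but fails on the mutant) can be shown with a toy example. All names here are hypothetical, the "code under test" is a single function, and the compilation and duplicate checks are left out.

```python
from typing import Callable

Impl = Callable[[int], bool]   # hypothetical unit under test
Test = Callable[[Impl], None]  # raises AssertionError on failure


def passes(test: Test, impl: Impl) -> bool:
    """Run a test against an implementation and report whether it passed."""
    try:
        test(impl)
        return True
    except AssertionError:
        return False


def mutant_survives(mutant: Impl, existing_tests: list[Test]) -> bool:
    """A mutant is worth targeting only if no existing test catches it."""
    return all(passes(t, mutant) for t in existing_tests)


def accept_new_test(test: Test, original: Impl, mutant: Impl) -> bool:
    """Keep a generated test only if it passes on the original and fails on the mutant."""
    return passes(test, original) and not passes(test, mutant)


# Toy code under test: an age check, and a mutant that weakens it.
def can_view_original(age: int) -> bool:
    return age >= 18


def can_view_mutant(age: int) -> bool:
    return age >= 17  # injected fault: boundary is off by one


def existing_test(impl: Impl) -> None:
    assert impl(30) is True
    assert impl(10) is False


def generated_test(impl: Impl) -> None:
    assert impl(17) is False  # targets the boundary the mutant changed
```

Here `mutant_survives(can_view_mutant, [existing_test])` is true, because the old test never probes the boundary, and `accept_new_test(generated_test, can_view_original, can_view_mutant)` is also true, so the generated test would be kept.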

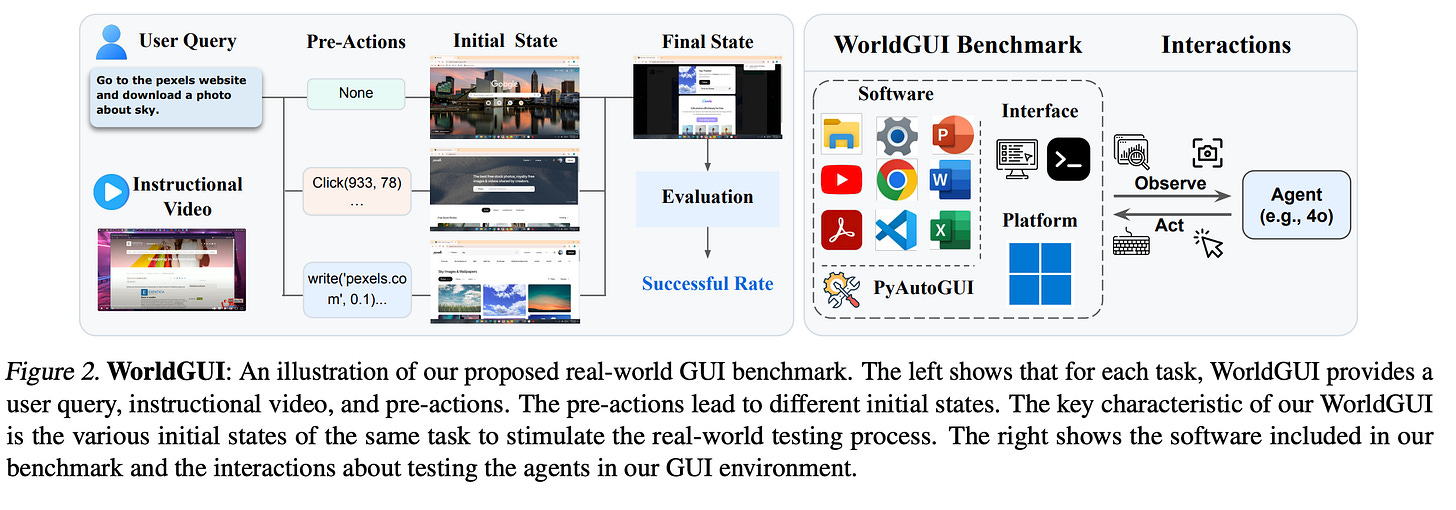

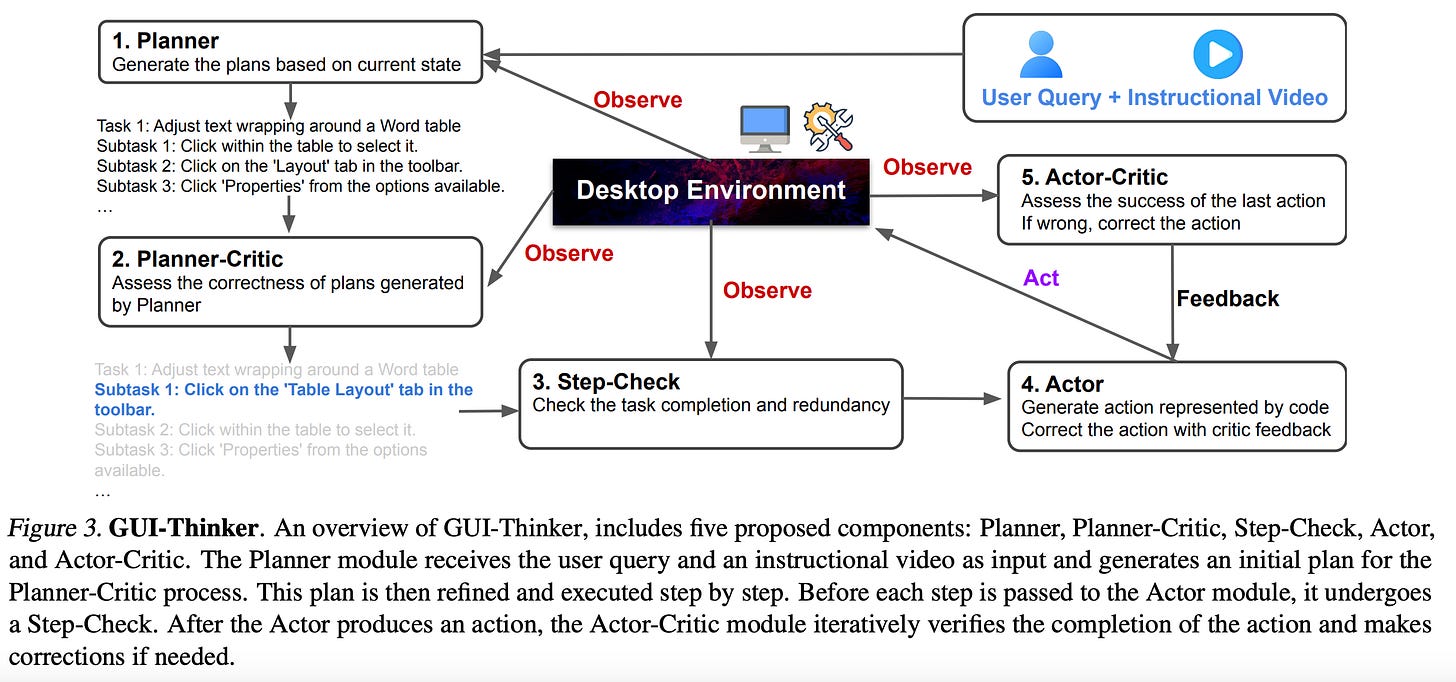

UI tests are among the most complex tests to develop and maintain. One solution is to use UI agents to test the UI. The problem is that UI agents are not always reliable and can fail to plan correctly. The WorldGUI paper examines this problem. According to the authors, the issue lies in the initial state. In other words, when we test a system, we expect it to be in an exact, known state at the beginning of the test, which is very hard to achieve for UI tests. The solution is twofold. The first part is a benchmark with dynamic initial states for evaluating GUI agents - WorldGUI. The second part is an improved UI agent - the GUI-Thinker framework. The framework introduces new steps: post-planning, pre-execution and post-action. These steps add self-correction, validation of actions before execution and evaluation before moving on to the next task. In effect, we teach the agent to be aware of the dynamic nature of the UI.
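To illustrate where the three extra checks sit in an agent loop, here is a toy sketch. It is not the GUI-Thinker implementation: the UI is reduced to a dictionary of flags, and `Step`, `run_plan` and `PLAN` are hypothetical names. Real agents would use a model to critique the plan and to judge screenshots.

```python
from dataclasses import dataclass
from typing import Callable

State = dict[str, bool]  # toy UI state, e.g. {"menu_open": False}


@dataclass
class Step:
    name: str
    precondition: Callable[[State], bool]  # must hold before acting
    action: Callable[[State], None]        # mutates the toy UI state
    goal: Callable[[State], bool]          # must hold after acting


def run_plan(plan: list[Step], state: State) -> list[str]:
    log = []
    # Post-planning: compare the plan with the observed initial state
    # and drop steps whose goal is already satisfied.
    remaining = []
    for step in plan:
        if step.goal(state):
            log.append(f"skip {step.name}")
        else:
            remaining.append(step)
    for step in remaining:
        # Pre-execution: validate the action before performing it.
        if not step.precondition(state):
            log.append(f"blocked {step.name}")
            break
        step.action(state)
        # Post-action: evaluate the result before moving to the next step.
        if step.goal(state):
            log.append(f"done {step.name}")
        else:
            log.append(f"failed {step.name}")
            break
    return log


PLAN = [
    Step("open_menu", lambda s: True,
         lambda s: s.update(menu_open=True), lambda s: s["menu_open"]),
    Step("toggle_dark", lambda s: s["menu_open"],
         lambda s: s.update(dark_mode=True), lambda s: s["dark_mode"]),
]
```

The same plan adapts to different starting points: if the menu is already open, `run_plan` skips `open_menu` instead of clicking it again and closing the menu by accident.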

Resources:

Paper: ASSERTIFY: Utilizing Large Language Models to Generate Assertions for Production Code - https://arxiv.org/abs/2411.16927

Paper: Mutation-Guided LLM-based Test Generation at Meta - https://arxiv.org/abs/2501.12862

Paper: WorldGUI: Dynamic Testing for Comprehensive Desktop GUI Automation - https://arxiv.org/abs/2502.08047