How to build a search assistant?

Finding information on the Internet can be hard, and LLM-based agents can make it much easier. The recent success of Perplexity and OpenAI's plan to build a search product show how important this problem is. The MindSearch paper describes how to build your own search assistant.

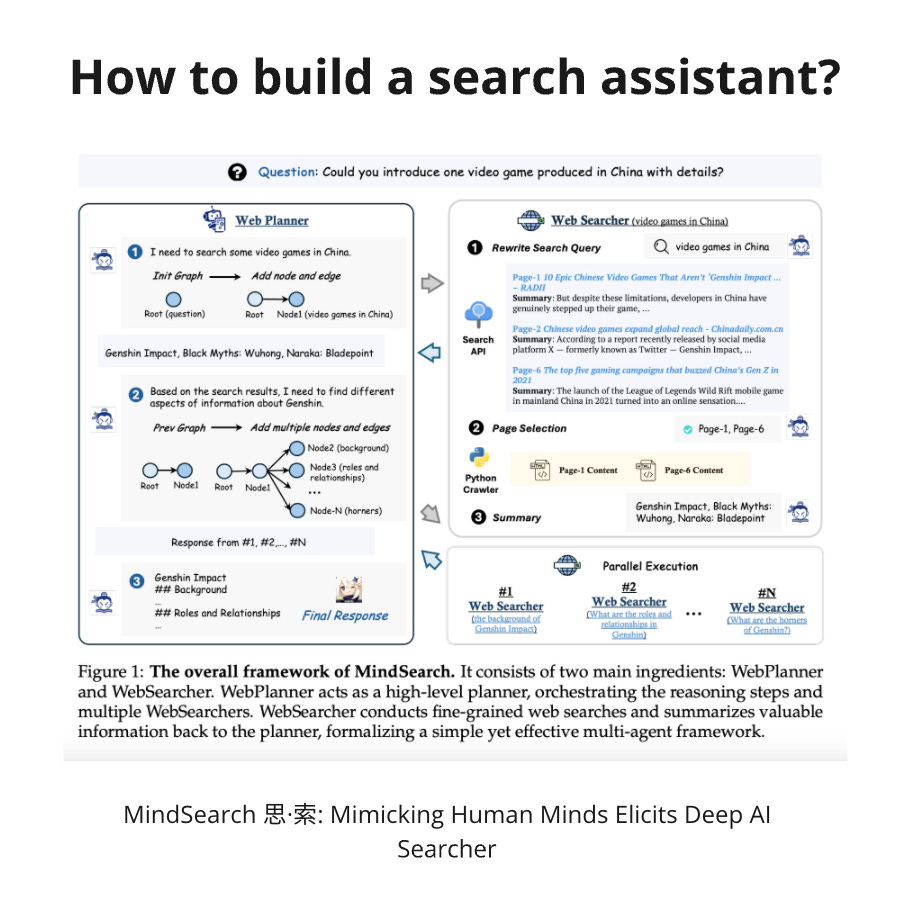

MindSearch splits the search process between two agents: WebPlanner and WebSearcher. WebPlanner mimics how the human brain works during a search: it splits the query into sub-questions and sends them to WebSearcher for execution. WebSearcher retrieves information from the web and returns the results to WebPlanner, which analyzes them and decides whether to return an answer to the user or start a new round of searches.
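
The planner/searcher split can be sketched as a simple loop. This is a minimal illustration, not MindSearch's implementation: `plan_subquestions` and `web_searcher` are hypothetical stand-ins for LLM and search calls, and splitting the query on "and" is a toy assumption.

```python
def plan_subquestions(query: str, known: dict[str, str]) -> list[str]:
    """Hypothetical WebPlanner step: propose sub-questions not answered yet.
    A real planner would ask an LLM; splitting on 'and' is a toy heuristic."""
    candidates = [f"What is {part.strip()}?" for part in query.split(" and ")]
    return [q for q in candidates if q not in known]

def web_searcher(question: str) -> str:
    """Hypothetical WebSearcher stub: would search the web and answer."""
    return f"answer to '{question}'"

def web_planner(query: str, max_rounds: int = 3) -> str:
    known: dict[str, str] = {}
    for _ in range(max_rounds):
        pending = plan_subquestions(query, known)
        if not pending:  # the planner decides it has enough information
            break
        for q in pending:  # delegate each sub-question to WebSearcher
            known[q] = web_searcher(q)
    # Final step: compose the answer for the user from the collected results.
    return "\n".join(f"{q} -> {a}" for q, a in known.items())
```

For example, `web_planner("MindSearch and USimAgent")` answers two sub-questions in the first round and stops in the second, because nothing is left to plan.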

WebSearcher receives a question from WebPlanner and rewrites it into several similar search queries, which are then sent to search engines such as Bing, Google, and DuckDuckGo. The next step is page selection: an LLM is prompted to select the pages that best match the original question. Only after these pages are selected does WebSearcher generate an answer to the original question and return control to WebPlanner.
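
A rough sketch of the WebSearcher pipeline (query rewriting, multi-engine search, de-duplication, page selection). Every function here is hypothetical: `fake_engine` only imitates a search API, and `select_pages` replaces the LLM's judgment with a crude word-overlap score.

```python
from typing import Callable

# A search engine is modeled as a function from a query to a list of result pages.
Engine = Callable[[str], list[dict]]

def rewrite_query(question: str) -> list[str]:
    """Hypothetical stand-in for the LLM rewrite step: produce related queries."""
    base = question.rstrip("?")
    return [base, f"{base} explained", f"{base} overview"]

def fake_engine(name: str) -> Engine:
    """Hypothetical engine; a real one would call the Bing, Google or DuckDuckGo API."""
    def search(query: str) -> list[dict]:
        slug = query.replace(" ", "-")
        return [{"url": f"https://{name}.example/{slug}",
                 "snippet": f"{query} result from {name}"}]
    return search

def select_pages(question: str, pages: list[dict], k: int = 2) -> list[dict]:
    """Stand-in for LLM page selection: rank pages by how many words their
    snippet shares with the question (ties keep their original order)."""
    q_words = set(question.lower().rstrip("?").split())
    def overlap(page: dict) -> int:
        return len(q_words & set(page["snippet"].lower().split()))
    return sorted(pages, key=overlap, reverse=True)[:k]

def web_searcher(question: str, engines: list[Engine]) -> str:
    queries = rewrite_query(question)
    # Send every rewritten query to every engine and de-duplicate by URL.
    pages = {p["url"]: p for q in queries for e in engines for p in e(q)}
    best = select_pages(question, list(pages.values()))
    # A real system would now prompt the LLM with the selected pages' content.
    return "Based on " + ", ".join(p["url"] for p in best)
```

Usage: `web_searcher("What is MindSearch?", [fake_engine("bing"), fake_engine("ddg")])` fans three queries out to two engines and keeps the two top-ranked pages.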

WebPlanner starts by decomposing the user's request into sub-questions. When WebSearcher returns an answer, WebPlanner analyzes it and decides on the next step: either another round of searches or generating the final answer for the user. WebPlanner tracks its progress in a directed acyclic graph (DAG), adding new nodes as the search unfolds. Interestingly, WebPlanner builds the DAG through code execution: it asks the LLM to generate code that updates the graph, and then runs that code.
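
The idea of letting the LLM update the DAG through generated code can be illustrated as follows. `SearchGraph`, its method names, and the `llm_code` string are assumptions made for this sketch (MindSearch's own graph API may differ). Note that `exec` with empty builtins is not a real sandbox.

```python
class SearchGraph:
    """Minimal DAG of sub-questions; method names are illustrative."""
    def __init__(self) -> None:
        self.nodes: dict[str, str] = {}
        self.edges: dict[str, list[str]] = {}

    def add_node(self, name: str, question: str) -> None:
        self.nodes[name] = question
        self.edges.setdefault(name, [])

    def add_edge(self, src: str, dst: str) -> None:
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError(f"unknown node in edge {src}->{dst}")
        self.edges[src].append(dst)
        if self._has_cycle():  # reject edges that would break the DAG property
            self.edges[src].remove(dst)
            raise ValueError(f"edge {src}->{dst} would create a cycle")

    def _has_cycle(self) -> bool:
        # Depth-first search: reaching a node still on the stack means a cycle.
        state = {n: 0 for n in self.nodes}  # 0=unvisited, 1=on stack, 2=done
        def visit(n: str) -> bool:
            state[n] = 1
            for m in self.edges[n]:
                if state[m] == 1 or (state[m] == 0 and visit(m)):
                    return True
            state[n] = 2
            return False
        return any(state[n] == 0 and visit(n) for n in self.nodes)

# Pretend this string came back from the LLM as the planner's next step.
llm_code = """
graph.add_node("root", "Compare MindSearch and Perplexity")
graph.add_node("q1", "How does MindSearch work?")
graph.add_node("q2", "How does Perplexity work?")
graph.add_edge("root", "q1")
graph.add_edge("root", "q2")
"""

graph = SearchGraph()
# Run the generated code with only the graph in scope (no builtins).
# This limits accidents but is NOT a security boundary.
exec(llm_code, {"__builtins__": {}, "graph": graph})
```

Using code as the interface lets the LLM express several graph updates in one response, while the graph class still enforces invariants such as acyclicity.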

The results of MindSearch are impressive. Thanks to parallel execution, it can process 300 web pages in 3 minutes, work that would take a human about 3 hours. Human evaluators preferred MindSearch's responses over those of ChatGPT-Web and Perplexity. Human evaluation is reliable but can be expensive. An alternative is to simulate users with an LLM, which is exactly what USimAgent does.
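
Parallel execution pays off because sub-questions that do not depend on each other can be searched concurrently. A minimal asyncio sketch, assuming a hypothetical `search_subquestion` coroutine whose `asyncio.sleep` stands in for network and LLM latency; MindSearch's actual concurrency mechanism may differ.

```python
import asyncio
import time

async def search_subquestion(question: str, delay: float = 0.2) -> str:
    """Hypothetical WebSearcher call; the sleep simulates network/LLM latency."""
    await asyncio.sleep(delay)
    return f"answer to {question!r}"

async def run_round(questions: list[str]) -> list[str]:
    # Independent DAG nodes have no edges between them, so they can run concurrently.
    # gather() returns results in the same order as the input questions.
    return await asyncio.gather(*(search_subquestion(q) for q in questions))

if __name__ == "__main__":
    start = time.perf_counter()
    answers = asyncio.run(run_round([f"sub-question {i}" for i in range(5)]))
    print(f"{len(answers)} answers in {time.perf_counter() - start:.2f}s")
```

With five sub-questions at 0.2 s each, the round should take roughly 0.2 s instead of about 1 s when run one after another.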

The USimAgent paper describes how to use LLMs to simulate search users in order to evaluate information retrieval systems. USimAgent can send queries to a search engine, analyze the results, and click on them. The paper shows how to run multiple rounds of simulation, storing the result of each round in a shared context. The reasoning process is built on top of the ReAct method. Notably, search simulation is very close to what a search assistant does: we could add one more step at the end that summarizes the shared context for the user.
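
The multi-round simulation with a shared context can be sketched like this. It is a toy, not USimAgent's method: `policy` replaces the LLM's ReAct-style reasoning with a fixed script, `INDEX` is a made-up search index, and clicks follow a hard-coded relevance flag. `summarize` is the extra step that turns the simulation log into an answer for the user.

```python
from dataclasses import dataclass, field

# Made-up search index: query -> list of (result title, is_relevant) pairs.
INDEX = {
    "mindsearch": [("MindSearch paper", True), ("Mind maps for beginners", False)],
    "mindsearch github": [("InternLM/MindSearch repo", True)],
}

@dataclass
class SharedContext:
    """Context shared across simulation rounds."""
    task: str
    log: list[str] = field(default_factory=list)
    clicked: list[str] = field(default_factory=list)

def policy(ctx: SharedContext, round_no: int) -> tuple[str, str]:
    """Hypothetical stand-in for the LLM's ReAct step (reason, then act).
    A real simulator would prompt an LLM with ctx.task and ctx.log."""
    scripted_queries = ["mindsearch", "mindsearch github"]
    if round_no < len(scripted_queries):
        return "search", scripted_queries[round_no]
    return "stop", ""

def simulate(task: str, max_rounds: int = 5) -> SharedContext:
    ctx = SharedContext(task)
    for round_no in range(max_rounds):
        action, arg = policy(ctx, round_no)
        ctx.log.append(f"Action: {action}({arg})")
        if action == "stop":
            break
        results = INDEX.get(arg, [])
        ctx.log.append(f"Observation: {[title for title, _ in results]}")
        # Simulated clicks; relevance is a hard-coded flag, not an LLM judgment.
        for title, relevant in results:
            if relevant:
                ctx.clicked.append(title)
                ctx.log.append(f"Click: {title}")
    return ctx

def summarize(ctx: SharedContext) -> str:
    """The extra assistant step: condense the shared context for the user."""
    return f"For '{ctx.task}', useful sources: " + "; ".join(ctx.clicked)
```

Calling `summarize(simulate("find MindSearch"))` runs two search rounds, records the clicks in the shared context, and then condenses them into one line.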

The Language Agent Tree Search (LATS) paper shows how to improve on the ReAct method for search. LATS consists of six operations: selection, expansion, evaluation, simulation, backpropagation, and reflection. In essence, LATS adapts the Monte Carlo Tree Search (MCTS) algorithm to language agents. As a result, LATS outperforms ReAct on the HotpotQA benchmark.
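
A compact MCTS loop labeled with the six LATS operations. This is a toy under heavy assumptions: the "task" is spelling a target string, `evaluate` stands in for the LLM value function, the 50/50 mix of heuristic score and rollout reward is arbitrary, and reflections are only recorded rather than fed back into an LLM prompt as LATS does.

```python
import math
import random

TARGET = "abc"    # toy goal standing in for a correct final answer
ACTIONS = "abcd"  # toy action space standing in for LLM-proposed actions

class Node:
    def __init__(self, state: str, parent: "Node | None" = None) -> None:
        self.state = state
        self.parent = parent
        self.children: list["Node"] = []
        self.visits = 0
        self.value = 0.0

    def uct(self, c: float = 1.4) -> float:
        """Upper Confidence bound for Trees; unvisited nodes are tried first."""
        if self.visits == 0:
            return float("inf")
        exploit = self.value / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore

def evaluate(state: str) -> float:
    """Stand-in for the LLM value function: share of TARGET's prefix matched."""
    matched = 0
    for got, want in zip(state, TARGET):
        if got != want:
            break
        matched += 1
    return matched / len(TARGET)

def is_terminal(state: str) -> bool:
    return len(state) >= len(TARGET)

def lats_search(iterations: int = 500, seed: int = 0) -> tuple[str, list[str]]:
    rng = random.Random(seed)
    root = Node("")
    reflections: list[str] = []
    for _ in range(iterations):
        # 1. Selection: descend by UCT until reaching a leaf.
        node = root
        while node.children:
            node = max(node.children, key=lambda n: n.uct())
        # 2. Expansion: add one child per action, then pick one to explore.
        if not is_terminal(node.state):
            node.children = [Node(node.state + a, node) for a in ACTIONS]
            node = rng.choice(node.children)
        # 3. Evaluation: heuristic score of the new node (an LLM in LATS).
        score = evaluate(node.state)
        # 4. Simulation: random rollout until a terminal state.
        state = node.state
        while not is_terminal(state):
            state += rng.choice(ACTIONS)
        if state == TARGET:
            return state, reflections
        # 5. Backpropagation: add the (arbitrarily weighted) value up to the root.
        reward = 0.0  # the rollout failed, otherwise we would have returned
        value = 0.5 * score + 0.5 * reward
        while node is not None:
            node.visits += 1
            node.value += value
            node = node.parent
        # 6. Reflection: record why the attempt failed (LATS feeds this to the LLM).
        reflections.append(f"rollout {state!r} did not reach the goal")
    return "", reflections
```

Compared with ReAct's single trajectory, the tree lets the agent back up and try sibling actions, with the value estimates steering it toward promising branches.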

References:

https://arxiv.org/abs/2407.20183 - MindSearch: Mimicking Human Minds Elicits Deep AI Searcher

https://github.com/InternLM/MindSearch - An LLM-based Multi-agent Framework of Web Search Engine (like Perplexity.ai Pro and SearchGPT)

https://github.com/ItzCrazyKns/Perplexica - Perplexica: an AI-powered search engine and open-source alternative to Perplexity AI

https://arxiv.org/abs/2310.04406 - Language Agent Tree Search Unifies Reasoning, Acting, and Planning in Language Models

https://arxiv.org/abs/2403.09142 - USimAgent: Large Language Models for Simulating Search Users