Game development in the LLM era

The games industry is worth 300B USD. To create a successful game, you need a great story, some code, and content (graphics, music, etc.). Narrative, code, and content are the main areas where LLMs can be super helpful.

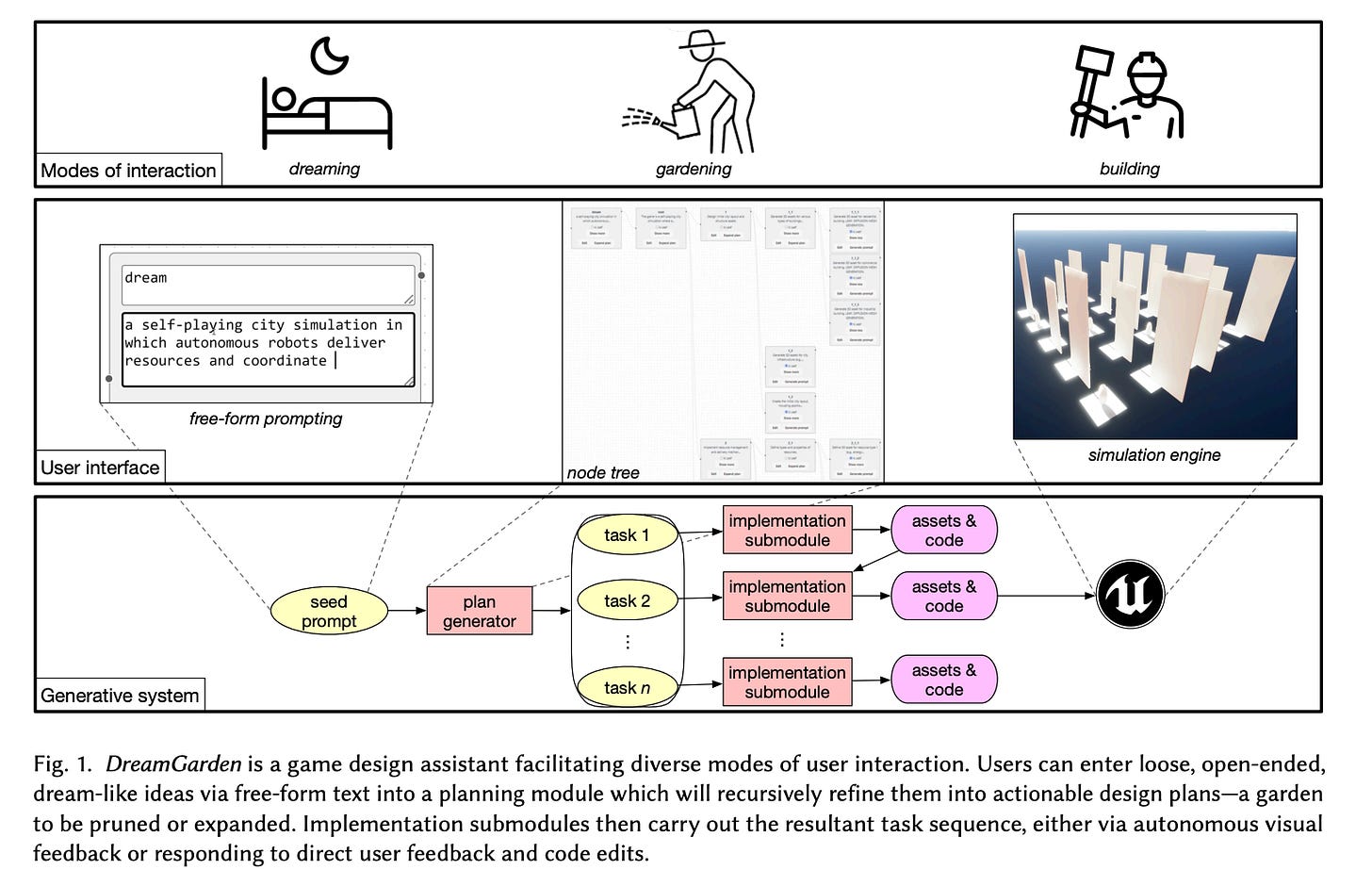

These days, almost all games are built on top of game engines such as Unreal Engine or Unity. This means we can simplify the task and build a coding assistant for a specific engine instead of a generic one. The DreamGarden paper is an example of this approach: it generates Unreal Engine games from text prompts.

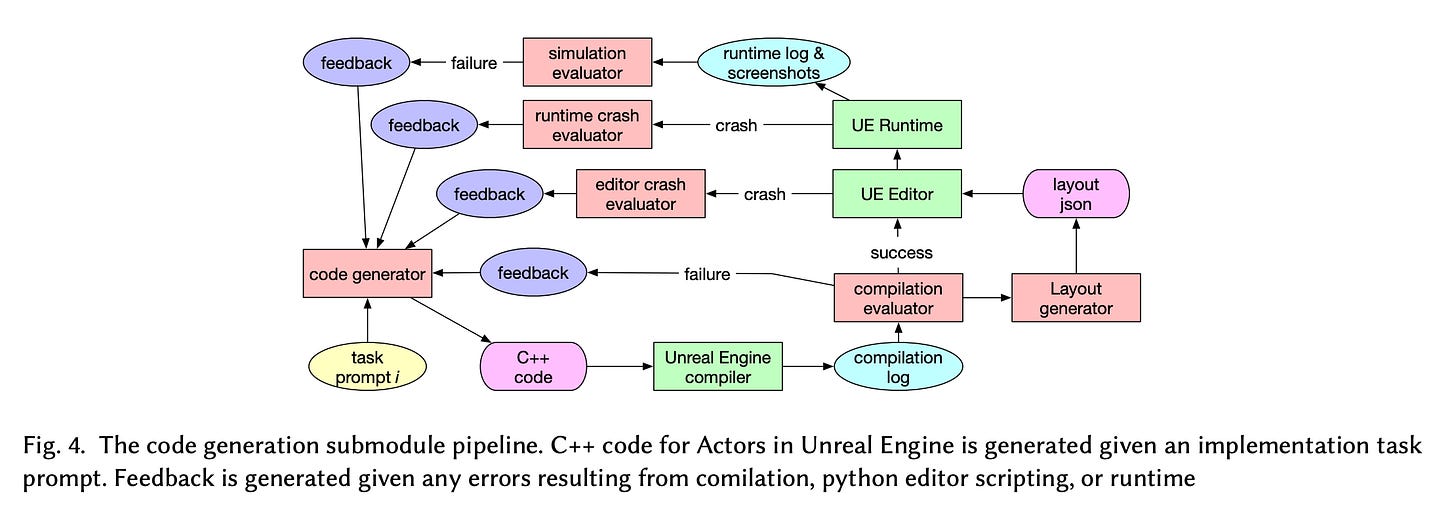

DreamGarden uses a planner to split the user's text prompt into tasks, which are then executed by implementation modules. The planner prepares a hierarchical plan tree. Each node is further split into sub-tasks by a component called a sub-planner. The planning process works recursively and stops once the sub-planner has marked every remaining node as a leaf. Each leaf is passed to a task generator, which translates it into a prompt for a specific implementation module. There are several implementation modules, such as a code generator, a mesh generator, and a mesh downloader. For instance, the code generator produces C++ code and uses feedback from the Unreal Engine compiler, editor, and runtime to improve the code and fix errors.
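The recursive planning step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not DreamGarden's actual code: `PlanNode`, `expand`, `generate_tasks`, the `max_depth` safety stop, and the toy sub-planner and routing rules are all hypothetical names, and a lookup table stands in for the LLM calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class PlanNode:
    description: str
    children: List["PlanNode"] = field(default_factory=list)

    @property
    def is_leaf(self) -> bool:
        return not self.children


# Hypothetical stand-in for the LLM sub-planner: it returns the sub-tasks of a
# node, or an empty list to mark the node as a leaf.
SubPlanner = Callable[[str], List[str]]


def expand(node: PlanNode, sub_planner: SubPlanner, max_depth: int = 4) -> PlanNode:
    """Recursively split a node until the sub-planner stops returning sub-tasks."""
    if max_depth == 0:
        return node  # safety stop; an assumption of this sketch
    for sub_task in sub_planner(node.description):
        node.children.append(expand(PlanNode(sub_task), sub_planner, max_depth - 1))
    return node


def leaves(node: PlanNode) -> List[PlanNode]:
    """Collect the leaves of the plan tree in depth-first order."""
    if node.is_leaf:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


def generate_tasks(root: PlanNode, route: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Task generator: turn every leaf into a (module name, prompt) pair."""
    return [(route(leaf.description), f"Implement: {leaf.description}") for leaf in leaves(root)]


# Toy plan used instead of a real LLM (hypothetical content).
TOY_PLAN: Dict[str, List[str]] = {
    "A garden game": ["Player character", "Garden environment"],
    "Player character": ["Movement code", "Character mesh"],
}


def toy_sub_planner(description: str) -> List[str]:
    return TOY_PLAN.get(description, [])


def toy_route(description: str) -> str:
    text = description.lower()
    if "code" in text:
        return "code_generator"
    if "mesh" in text:
        return "mesh_generator"
    return "mesh_downloader"


root = expand(PlanNode("A garden game"), toy_sub_planner)
tasks = generate_tasks(root, toy_route)
for module, prompt in tasks:
    print(module, "<-", prompt)
```

In a real system, `toy_sub_planner` and `toy_route` would be replaced by LLM calls, and each module would run its own loop (for example, the code generator retrying until the engine compiles the result).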

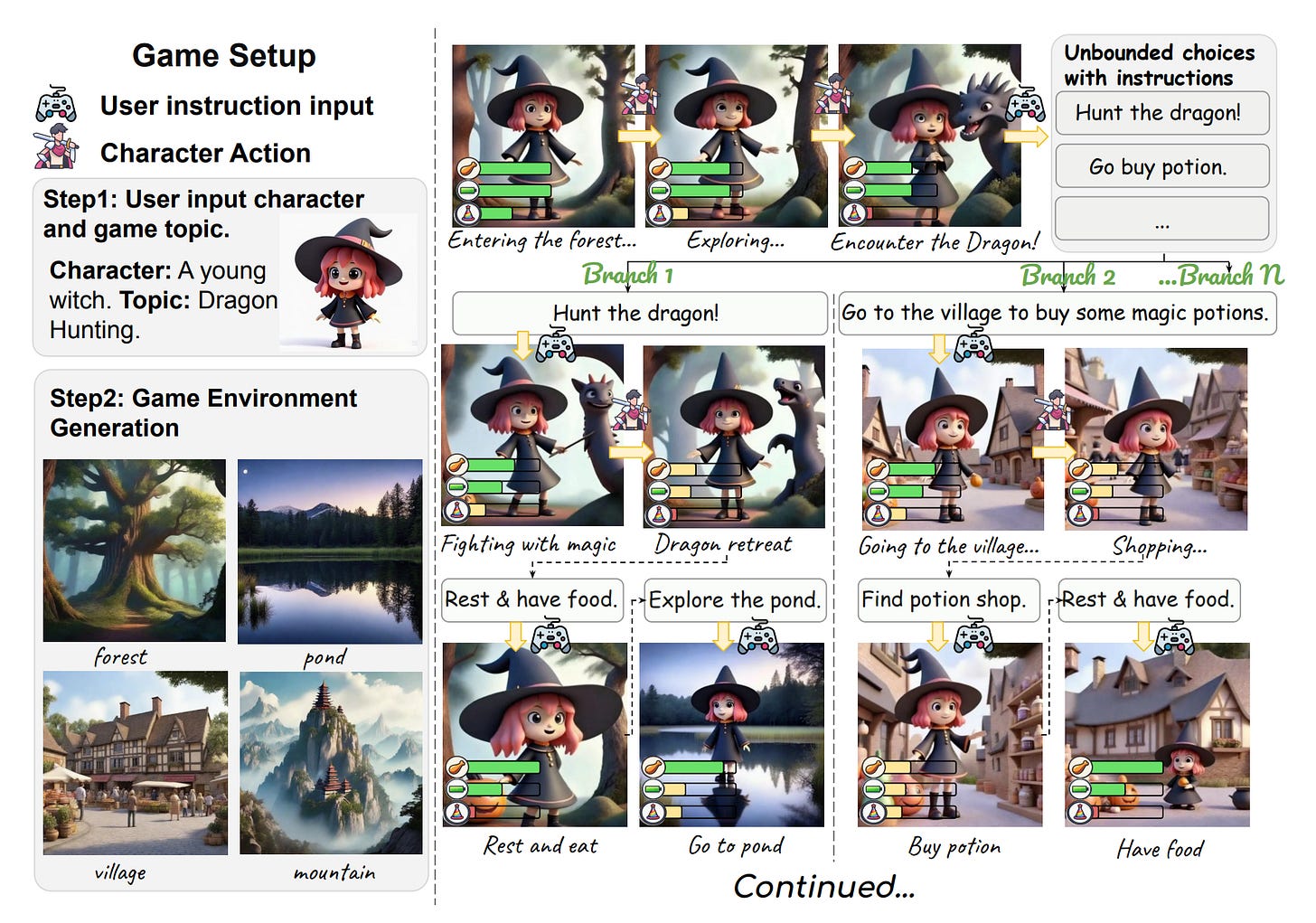

The Unbounded paper takes a different approach: no game engine is used, and the game is built purely with generative models. The paper focuses on generating an infinite game (a sandbox life simulation) like The Sims or Tamagotchi. A player interacts with the game through text prompts, and the game generates a continuation of the story together with the matching graphics.

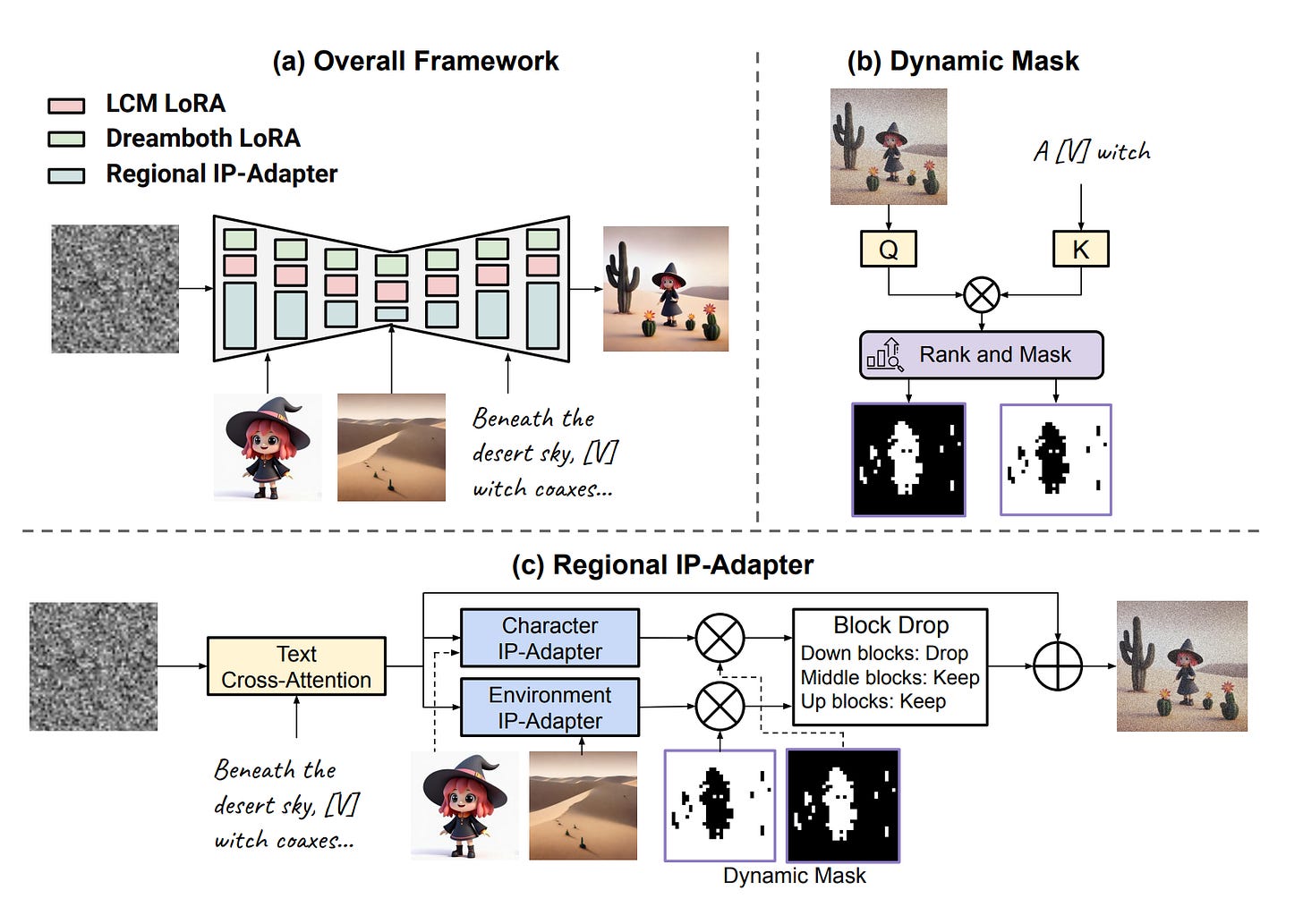

For image generation (the environment), the paper uses latent consistency models, which provide real-time text-to-image generation. It uses DreamBooth for consistent character generation and a Regional IP-Adapter to embed the character into the environment.

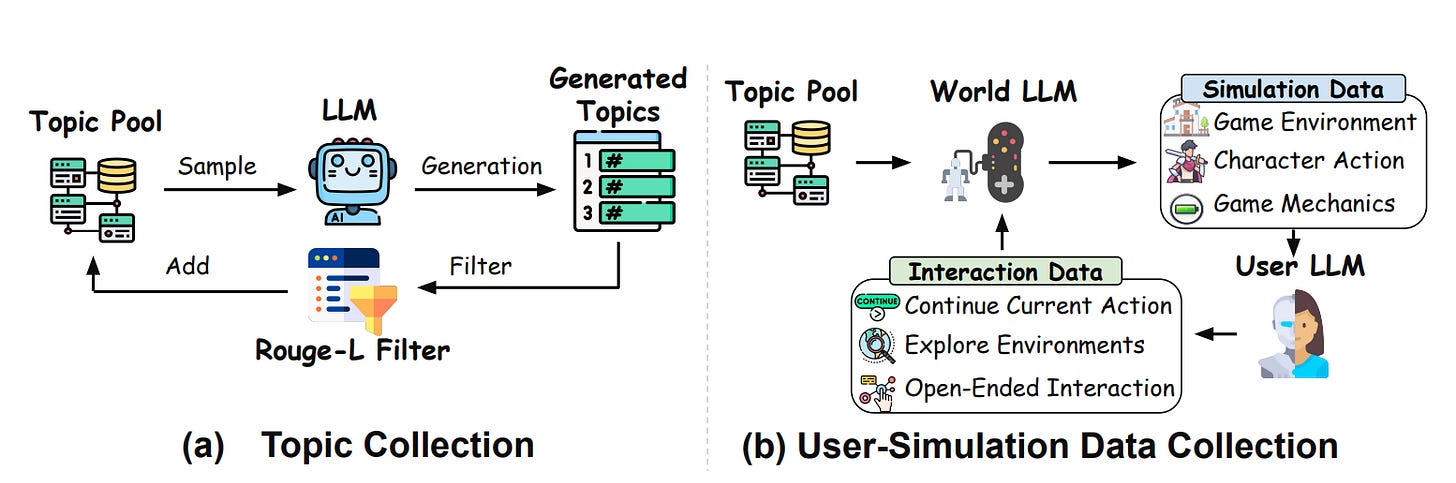

Big models such as GPT-4 can be used as game engines. These models have common-sense knowledge of the world and can predict the response to a user action quite well, so they can be used for game world modelling. The problem is that big models take a long time to respond. The Unbounded paper solves this by fine-tuning a small language model, Gemma-2B. Two AI agents were used to prepare the data for fine-tuning: a User LLM and a World Simulation LLM. The User LLM mimics user behaviour by providing interaction inputs. The World Simulation LLM receives these inputs, generates responses, and maintains the state of the game.
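The interplay of the two agents can be sketched as a simple loop. This is a minimal sketch, not the paper's pipeline: the function signatures, the record layout, and the `hunger`/`fun` state fields are assumptions made for illustration, and plain Python functions stand in for the two LLMs.

```python
import json
from typing import Callable, Dict, List, Tuple

# Hypothetical signatures for the two agents.
UserLLM = Callable[[Dict], str]                     # game state -> player input
WorldLLM = Callable[[Dict, str], Tuple[str, Dict]]  # (state, input) -> (narration, new state)


def collect_episode(user_llm: UserLLM, world_llm: WorldLLM,
                    initial_state: Dict, turns: int) -> List[Dict]:
    """Let the User LLM and the World Simulation LLM talk and log every turn."""
    state = dict(initial_state)
    samples = []
    for _ in range(turns):
        action = user_llm(state)                        # simulated player input
        narration, new_state = world_llm(state, action)  # simulated game response
        samples.append({"state": state, "input": action,
                        "response": narration, "next_state": new_state})
        state = new_state
    return samples


# Toy stand-ins for the two LLMs (hypothetical rules, no model calls).
def toy_user(state: Dict) -> str:
    return "feed the character" if state["hunger"] > 0 else "play with the character"


def toy_world(state: Dict, action: str) -> Tuple[str, Dict]:
    new_state = dict(state)
    if action == "feed the character":
        new_state["hunger"] = max(0, state["hunger"] - 1)
        return "The character eats happily.", new_state
    new_state["fun"] = state["fun"] + 1
    return "The character plays in the garden.", new_state


episode = collect_episode(toy_user, toy_world, {"hunger": 1, "fun": 0}, turns=3)
print(json.dumps(episode[-1], indent=2))
```

Each logged record pairs a state and an input with the response and the next state, which is the shape of example a small model would need in order to learn to play the role of the World Simulation LLM.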

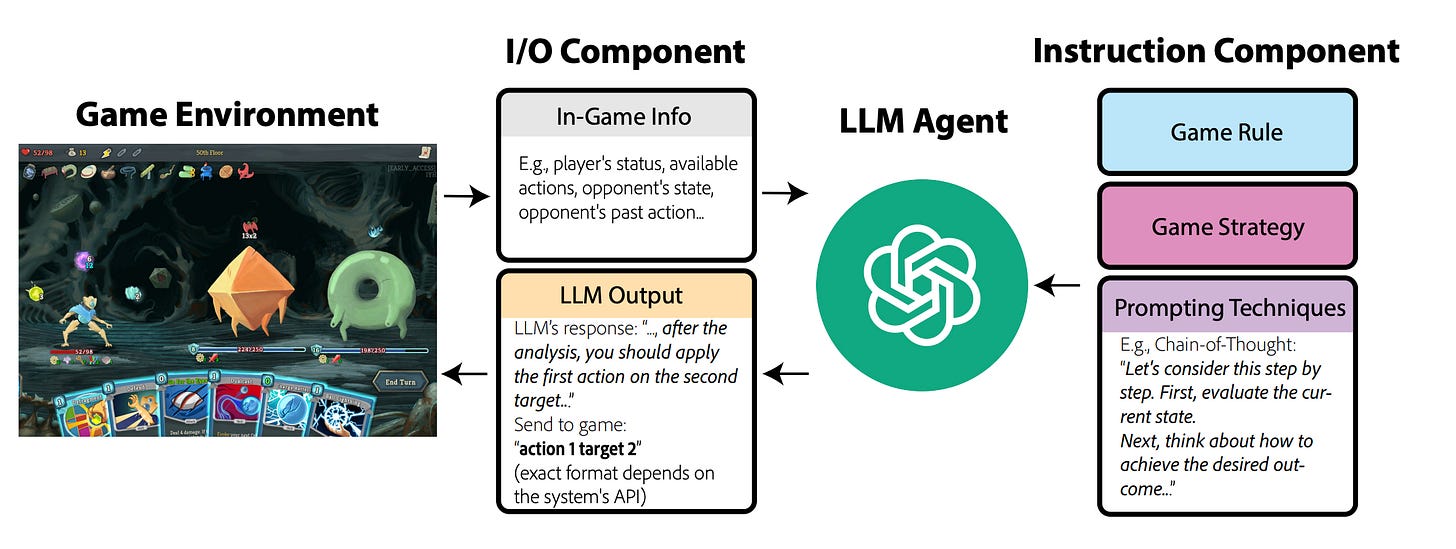

In the Unbounded paper, the LLM-as-a-Judge approach was used to evaluate the quality of the training data or, in other words, the quality of the game modelling. LLMs can also be used as a proxy for real players. The paper 'LLMs May Not Be Human-Level Players, But They Can Be Testers: Measuring Game Difficulty with LLM Agents' focuses on game difficulty, an essential factor: a poorly balanced game leaves players dissatisfied. The LLM agent in the paper uses Chain-of-Thought prompting combined with the game rules and strategy hints to react to the game state. It receives the in-game information as text and responds with the actions to execute. By measuring the LLM agent's progress in the game, for example as remaining health points or the number of solved puzzles, we can build game difficulty curves and understand how well the game is balanced.
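The idea can be sketched as follows, assuming a hypothetical prompt layout, an `ACTION:` output convention, and a `play_level` callback that runs the agent on one level and returns its progress as a number between 0 and 1. None of these names come from the paper.

```python
from statistics import mean
from typing import Callable, Dict, List


def build_prompt(rules: str, strategy: str, game_info: str) -> str:
    """Chain-of-Thought style prompt: rules, strategy hints, then the current state."""
    return (
        f"Game rules:\n{rules}\n\n"
        f"Strategy hints:\n{strategy}\n\n"
        f"Current state:\n{game_info}\n\n"
        "Think step by step, then give your move on the last line as 'ACTION: <move>'."
    )


def parse_action(reply: str) -> str:
    """Extract the final action from the model's reasoning (assumed output format)."""
    for line in reversed(reply.strip().splitlines()):
        if line.startswith("ACTION:"):
            return line[len("ACTION:"):].strip()
    raise ValueError("no ACTION line in reply")


def difficulty_curve(play_level: Callable[[int], float],
                     levels: List[int], runs: int = 5) -> Dict[int, float]:
    """Average agent progress per level; lower progress suggests a harder level."""
    return {level: mean([play_level(level) for _ in range(runs)]) for level in levels}


# Toy stand-in for "run the LLM agent on this level and measure its progress".
def toy_play_level(level: int) -> float:
    return max(0.0, 1.0 - 0.25 * level)


curve = difficulty_curve(toy_play_level, levels=[0, 1, 2, 3])
print(curve)
print(parse_action("The enemy is close.\nI should dodge.\nACTION: dodge left"))
```

A smooth downward curve suggests difficulty ramps up gradually, while a sudden drop between two neighbouring levels points to a balance problem worth checking with human testers.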

Resources:

https://arxiv.org/abs/2410.01791 - DreamGarden: A Designer Assistant for Growing Games from a Single Prompt

https://arxiv.org/abs/2410.18975 - Unbounded: A Generative Infinite Game of Character Life Simulation

https://arxiv.org/abs/2410.02829 - LLMs May Not Be Human-Level Players, But They Can Be Testers: Measuring Game Difficulty with LLM Agents